Google Kandinsky

Kandinsky lets you make music through art. Users doodle, scribble or draw on a web canvas and hit play to listen to their artwork. Some shapes even smile and sing back at you.

This article describes the tech behind the experience. The project was a collaboration with our friends at Google Creative Lab, in which we explored a bunch of different possibilities by sketching through code. Check it out at Chrome Music Experiments.

We started by using the HTML5 canvas to draw lines every frame, which was too slow. The second iteration used WebGL and turned lines into geometry by triangulation, which worked and was fast but gave us limited control over the look of the line. Our final build uses WebGL to draw lines that are calculated and rendered on the GPU, resulting in a very smooth, dynamic and addictive experience.

WebGL line drawing

In regular drawing applications, the canvas is stored as pixel data and the pixels are manipulated for every stroke. For Kandinsky, however, each line is stored as a separate mesh, made up of a series of points and attached to a WebGL scene.

Below you can see a wireframe example of the resulting mesh.

In this example, the width of the line is constant. The major benefit of storing the line as a mesh, however, is that each vertex is editable.
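
To make the idea of a line stored as a mesh more concrete, here is a minimal sketch of how a series of points could be expanded into triangle-strip vertices. Our final build calculates the line on the GPU; this version does an equivalent expansion on the CPU purely for illustration, and the names `Pt` and `buildRibbon` are hypothetical, not taken from the project.

```typescript
// Hypothetical point type; the project's real data structures are not shown in this article.
interface Pt { x: number; y: number; }

/**
 * Expand a polyline into triangle-strip vertices: two vertices per input
 * point, offset along the local normal by half the width at that point.
 * Returns a flat [x0, y0, x1, y1, ...] array suitable for a vertex buffer.
 */
function buildRibbon(points: Pt[], widths: number[]): Float32Array {
  const out = new Float32Array(points.length * 4);
  for (let i = 0; i < points.length; i++) {
    // Direction from the previous point to the next one (clamped at the ends).
    const prev = points[Math.max(i - 1, 0)];
    const next = points[Math.min(i + 1, points.length - 1)];
    const dx = next.x - prev.x;
    const dy = next.y - prev.y;
    const len = Math.sqrt(dx * dx + dy * dy) || 1; // avoid dividing by zero
    // The unit normal is the direction rotated by 90 degrees.
    const nx = -dy / len;
    const ny = dx / len;
    const half = widths[i] / 2;
    out[i * 4 + 0] = points[i].x + nx * half;
    out[i * 4 + 1] = points[i].y + ny * half;
    out[i * 4 + 2] = points[i].x - nx * half;
    out[i * 4 + 3] = points[i].y - ny * half;
  }
  return out;
}
```

Because every point keeps its own width entry, changing one value in `widths` and rebuilding moves only the two vertices that belong to that point.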

Below you can see the widths being manipulated. The velocity of the drawing is used to make the line thicker or thinner and, once the line is completed, the width is animated with some compound sine curves to create a wobbly effect.
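
The width behaviour described above could be sketched roughly as follows. This is an illustration under assumptions, not our production code: we assume here that faster strokes are thinner, and the function names, constants and frequencies are all made up for the example.

```typescript
/**
 * Map drawing speed (pixels per frame) to a line width.
 * Assumption: faster strokes are thinner. All constants are illustrative.
 */
function widthFromSpeed(speed: number, maxWidth = 12, minWidth = 2, falloff = 0.05): number {
  const w = maxWidth / (1 + falloff * speed);
  return Math.max(minWidth, w);
}

/**
 * Compound sine wobble: two sine waves with different frequencies and
 * amplitudes added together. `t` is time in seconds, `along` is the
 * position along the line from 0 to 1. The result is a width multiplier
 * that stays between 0.8 and 1.2.
 */
function wobble(t: number, along: number): number {
  return 1 + 0.15 * Math.sin(t * 3 + along * 10) + 0.05 * Math.sin(t * 7.3 + along * 23);
}

// Width of one vertex on a finished line at a given moment.
function animatedWidth(speed: number, t: number, along: number): number {
  return widthFromSpeed(speed) * wobble(t, along);
}
```

Mixing frequencies that are not simple multiples of each other keeps the wobble from visibly repeating too soon.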

Because we render a series of points on the GPU, we can redraw or erase them, which let us animate the lines and create this unique undo effect.

Using a mesh attached to WebGL gave us flexibility to make the lines more interactive; they feel alive on the page and much more engaging than a static image or pre-rendered video.

Multi-threaded physics animations

A big challenge when simulating a large number of interactive objects at once is rendering the animations consistently at 60 fps on every supported device. We handled this by moving the physics math off the main thread and into a Web Worker.

Inside the Web Worker, we maintain the state of each shape as a set of Vector objects, one for each point drawn as part of the shape, each with X and Y coordinates.

When a shape plays a sound, the points are scaled up and snap back to their original positions with a spring physics calculation. While the shape is animating, the coordinates are all flattened into a Float32Array, which is passed back to the main thread very quickly thanks to transferable objects in Web Workers: the underlying buffer is handed over rather than copied. Once back on the main thread, the geometry of the line is updated with the new data, ready to draw on the next animation frame!
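
As a rough sketch of that worker-side loop, the snippet below steps a damped spring for each point and flattens the result into a Float32Array. The types, function names and the stiffness and damping values are assumptions made for this example; the only real API referenced is the standard `postMessage(message, transferList)` call, shown in a comment so the snippet also runs outside a worker.

```typescript
// Hypothetical shapes of the worker-side state.
interface Vec2 { x: number; y: number; }
interface SpringPoint { pos: Vec2; vel: Vec2; rest: Vec2; }

/**
 * Advance one point by `dt` seconds using a damped spring that pulls it
 * back toward its rest position (semi-implicit Euler integration).
 * Stiffness and damping values are illustrative.
 */
function stepSpring(p: SpringPoint, dt: number, stiffness = 120, damping = 12): void {
  const ax = -stiffness * (p.pos.x - p.rest.x) - damping * p.vel.x;
  const ay = -stiffness * (p.pos.y - p.rest.y) - damping * p.vel.y;
  p.vel.x += ax * dt;
  p.vel.y += ay * dt;
  p.pos.x += p.vel.x * dt;
  p.pos.y += p.vel.y * dt;
}

// Flatten point positions into [x0, y0, x1, y1, ...] for the main thread.
function flattenPoints(points: SpringPoint[]): Float32Array {
  const out = new Float32Array(points.length * 2);
  points.forEach((p, i) => {
    out[i * 2] = p.pos.x;
    out[i * 2 + 1] = p.pos.y;
  });
  return out;
}

// Inside a worker, the buffer would be transferred rather than copied:
//   const data = flattenPoints(points);
//   postMessage(data, [data.buffer]);
```

After the transfer the worker can no longer use that buffer, so a fresh Float32Array is created for each message in this sketch.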

Gesture recognition

When the user draws a circle or triangle, the program recognizes the shape and applies a unique sound and effect.

We used a library called Dollar Gesture Recognizer to achieve this. Sample gestures for each shape, drawn in each direction, are stored and then used for comparison.

As a user draws their line, the line is simplified, compared against the library samples, and then smoothed to create the final form. Comparing the simplified line rather than the raw input shaved many milliseconds from the total computation time.
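
This article does not go into which simplification we applied, so the sketch below uses the well-known Ramer-Douglas-Peucker algorithm as a stand-in to show the idea: drop points that barely change the shape of the line before handing it to the recognizer. The names `P2`, `distToLine` and `simplify` are hypothetical.

```typescript
interface P2 { x: number; y: number; }

// Perpendicular distance from p to the line through a and b.
// Falls back to plain point distance when a and b coincide (closed shapes).
function distToLine(p: P2, a: P2, b: P2): number {
  const dx = b.x - a.x;
  const dy = b.y - a.y;
  const len = Math.sqrt(dx * dx + dy * dy);
  if (len === 0) {
    return Math.sqrt((p.x - a.x) * (p.x - a.x) + (p.y - a.y) * (p.y - a.y));
  }
  return Math.abs(dy * (p.x - a.x) - dx * (p.y - a.y)) / len;
}

// Ramer-Douglas-Peucker: keep only points that deviate more than `epsilon`.
function simplify(points: P2[], epsilon: number): P2[] {
  if (points.length < 3) return points.slice();
  const first = points[0];
  const last = points[points.length - 1];
  let maxDist = 0;
  let index = 0;
  for (let i = 1; i < points.length - 1; i++) {
    const d = distToLine(points[i], first, last);
    if (d > maxDist) {
      maxDist = d;
      index = i;
    }
  }
  // Everything in between is close enough to a straight segment.
  if (maxDist <= epsilon) return [first, last];
  // Otherwise split at the farthest point and simplify both halves.
  const left = simplify(points.slice(0, index + 1), epsilon);
  const right = simplify(points.slice(index), epsilon);
  return left.slice(0, -1).concat(right);
}
```

Fewer points means fewer distance calculations per stored sample when matching, which is where a saving like this would come from.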

The recognition is still not 100% accurate, but for a fun little app like this it works perfectly well.

Playback timing

Some pre-calculations make it as easy as possible to create nice-sounding compositions.

The sounds chosen for each shape and scheme were all notes taken from a single scale, so when a user draws shapes in different areas of the screen, the varying notes make coherent chords and melodies. The different shapes' sounds were also composed to work well together.

The timing of each beat was forced to conform to a specific rhythm, and the length of the beat was determined by the number of shapes across the screen. As a result, each sound seems to play at the right time, and the user doesn't have to worry about positioning their drawings on the canvas.

Shapes close enough to each other going left-to-right are played at the same time to create chords, and to help keep the different sounds in rhythm.
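
One way to picture the timing rules above is the sketch below. It sorts shapes from left to right, groups neighbours that are close enough into a chord, and spreads the groups evenly over a loop. The grouping threshold, the fixed loop length and all names are assumptions for illustration, not the values or code we shipped.

```typescript
// Hypothetical input and output types.
interface DrawnShape { id: string; x: number; }
interface ScheduledBeat { time: number; shapeIds: string[]; }

/**
 * Quantize shapes to beats.
 * - chordGap: max horizontal distance (pixels) from the first shape in a
 *   group for another shape to join the same chord.
 * - loopSeconds: assumed total loop length; each group gets an equal share.
 */
function scheduleBeats(shapes: DrawnShape[], chordGap: number, loopSeconds: number): ScheduledBeat[] {
  // Work on a copy so the caller's array order is untouched.
  const sorted = shapes.slice().sort((a, b) => a.x - b.x);
  const groups: DrawnShape[][] = [];
  for (const s of sorted) {
    const current = groups[groups.length - 1];
    if (current && s.x - current[0].x <= chordGap) {
      current.push(s); // close enough: plays with the current chord
    } else {
      groups.push([s]); // too far: starts a new beat
    }
  }
  // Beat length depends on how many groups span the screen.
  const beatLength = groups.length > 0 ? loopSeconds / groups.length : 0;
  return groups.map((g, i) => ({
    time: i * beatLength,
    shapeIds: g.map((s) => s.id),
  }));
}
```

With this approach the exact x position of a drawing only decides its order and its chord, never the precise moment it plays.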

Facial animation

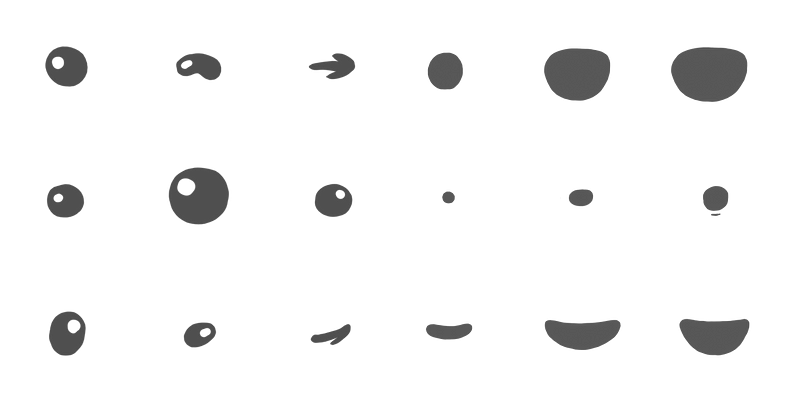

To make the app more playful, each circle is decorated with eyes that blink and a mouth that sings.

These elements were added into the physics simulation so that they interacted in the same way as the surrounding line.

The animations were made using a sprite sheet of different eye and mouth shapes, switching between them to create the different effects.

In order to apply the correct color to the eyes, a shader multiplies the input eye shape against the selected color. This value could then be animated when toggling between colors.
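
The multiply itself is simple enough to show on the CPU. The sketch below mirrors what a fragment shader would do per pixel (conceptually `texel * tint`) and adds a linear blend for animating between two colors. The type and function names are hypothetical, and colors are assumed to be RGBA values in the 0 to 1 range.

```typescript
// RGBA color with each channel in the range 0..1.
type RGBA = [number, number, number, number];

// CPU-side equivalent of a shader computing `texel * tint` per pixel.
// A white sprite pixel takes on the tint; a transparent pixel stays transparent.
function multiplyTint(texel: RGBA, tint: RGBA): RGBA {
  return [
    texel[0] * tint[0],
    texel[1] * tint[1],
    texel[2] * tint[2],
    texel[3] * tint[3],
  ];
}

// Linear blend between two tints; t = 0 gives `a`, t = 1 gives `b`.
function mixTint(a: RGBA, b: RGBA, t: number): RGBA {
  return [
    a[0] + (b[0] - a[0]) * t,
    a[1] + (b[1] - a[1]) * t,
    a[2] + (b[2] - a[2]) * t,
    a[3] + (b[3] - a[3]) * t,
  ];
}
```

Animating `t` from 0 to 1 over a few frames and feeding the result into the multiply gives a smooth transition when the color is toggled.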

Conclusion

This project is definitely an Active Theory all-time favorite. The concept is simple and fun (thanks Creative Lab), and it is the perfect example of using complex technology to create an experience that requires little to no instruction. People just dive in and start making music. It took the form of one prototype or another over the last two years, and we're ecstatic to be able to finally share it.