Mira & Future Web

View the project: Web / iOS / Android

Since Active Theory’s beginnings in 2012, we’ve been developing a JavaScript framework, Hydra, in order to allow us to efficiently create projects that are expressive, animated, and run well on every platform using the web. It’s a tool that has grown with us and evolved into the core of our studio.

With Hydra, each of our developers works within a similar code structure. This enables contributions from the entire team as we work towards harnessing the vast capabilities of HTML5, from URL routing to WebGL and beyond.

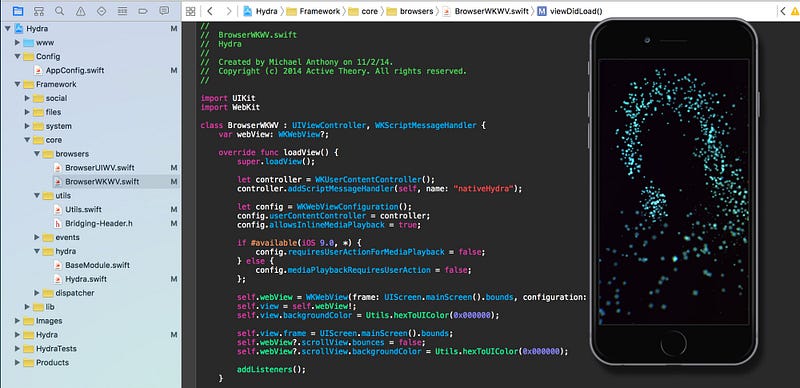

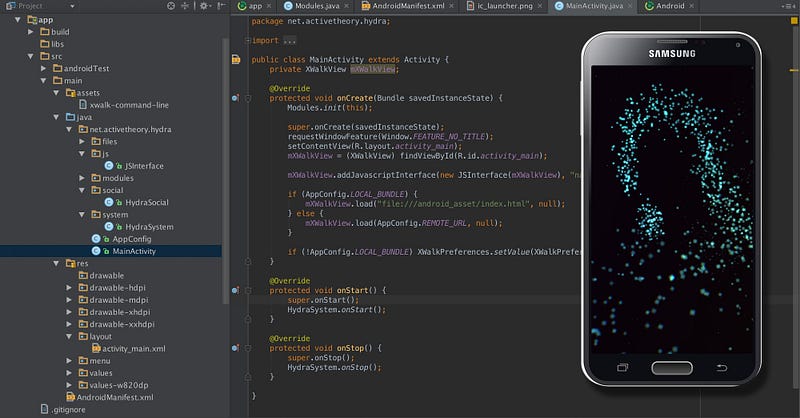

Extending to other platforms

In 2014, we began developing native applications for iOS and Android using Hydra. These applications are essentially fullscreen websites that communicate with native code on the device to access hardware that isn’t normally available to a browser, thereby extending the web. Features such as Bluetooth, iBeacons, Apple Pay, and Touch ID are all now available to Hydra.

We love the direction the web is heading, and believe that there are still many capabilities that lie untouched. With the experience and tools we’ve developed over the past few years, we can confidently create user experiences and applications in which the underlying technology, be it native or JavaScript, matters little to the end user; the animations will delight and the performance will be seamless.

We’ve since taken the platform even further, extending our reach to desktop applications, most recently for physical installations, as well as to Apple TV and cutting-edge tech such as WebVR.

The most important feature of this platform is that the application code is written in a single JavaScript codebase. That means one developer or team can develop the application across all platforms.

In contrast with Responsive Design, which works best for static content layouts, we take an Adaptive approach, enabling us to create more robust and dynamic experiences. This means that we harness all of the information available to us about a user’s device and actions, and adapt our design accordingly. When we write project code, we can reference this information to make decisions at runtime about which code to execute, based on screen size, hardware capabilities, performance, and more. Because we already worked this way, it was trivial to extend the practice to mobile platforms, physical installations, and Virtual Reality.
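The kind of decision described above can be sketched roughly as follows. This is only an illustration: the `device` fields, the thresholds, and the `chooseSettings` function are hypothetical names invented for this example and are not Hydra's API.

```javascript
// Hypothetical sketch: choose settings at run time from device information.
// Field names and thresholds are illustrative assumptions, not Hydra's API.
function chooseSettings(device) {
  const settings = { particles: 20000, postProcessing: true, input: 'mouse' };

  // Touch devices get touch input; VR or installations could branch similarly.
  if (device.touch) settings.input = 'touch';

  if (!device.webgl) {
    // No WebGL support: skip the particle rendering entirely.
    settings.particles = 0;
    settings.postProcessing = false;
  } else if (device.width < 768 || device.gpuTier === 'low') {
    // Small screens or weak GPUs get a lighter version of the experience.
    settings.particles = 5000;
    settings.postProcessing = false;
  }
  return settings;
}

const phone = chooseSettings({ touch: true, webgl: true, width: 375, gpuTier: 'low' });
const desktop = chooseSettings({ touch: false, webgl: true, width: 1920, gpuTier: 'high' });
```

The point of the sketch is that one codebase contains every branch, and the device description selects among them when the code runs.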

The Mira Concept

In order to showcase the framework’s capabilities, we wanted to put together a simple application that adapted to each possible platform, all while using a single JavaScript codebase.

Some of the client work we had done in the past few years had pushed us to incorporate particles using WebGL, and our tools had evolved accordingly. The concept we arrived at was a very simple meditative experience using particles, color, and sound to encourage relaxation.

Creating the Particle Sim

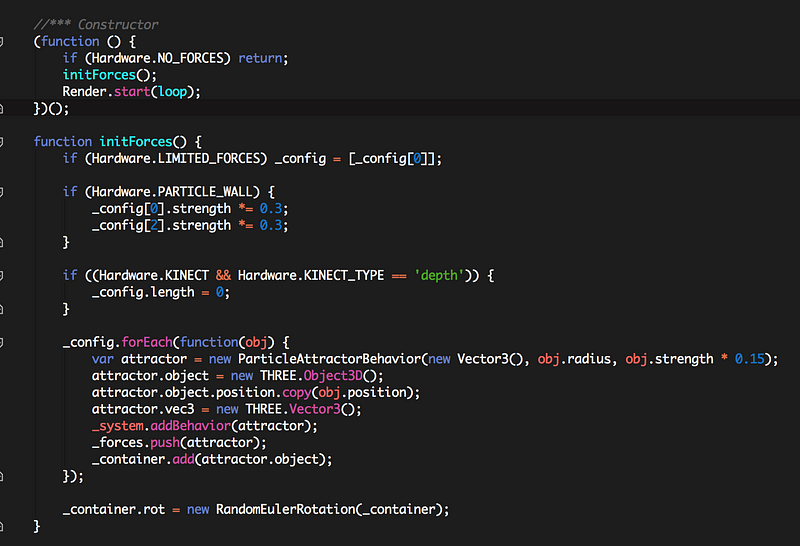

One of the features in Hydra is a particle physics simulation tool that allows us to create complex particle behaviors by breaking them down into simpler functions in code.

The approach to the behavior of the particles is fairly straightforward: when a user moves their mouse or finger, we check the movement’s velocity and spawn a particle with that value attached, sending it moving in the same direction.

Once created, the particle lives at the whim of a series of attraction and repulsion forces that push and pull it depending on its position relative to them. This creates a hypnotizing dance of particles that eventually fade away with time.
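A minimal sketch of this behavior, assuming a simple 2D simulation, might look like the following. It is not Hydra's particle tool: `spawnParticle`, `attractor`, `repulsor`, `step`, and all of the constants are hypothetical names and values chosen for illustration.

```javascript
// Hypothetical sketch of the behavior described above; not Hydra's particle tool.
function spawnParticle(pos, velocity) {
  // The particle inherits the pointer's velocity, so it keeps moving the same way.
  return { x: pos.x, y: pos.y, vx: velocity.x, vy: velocity.y, life: 1 };
}

// Each behavior is a small function that nudges a particle's velocity.
function attractor(cx, cy, strength) {
  return (p, dt) => {
    const dx = cx - p.x;
    const dy = cy - p.y;
    const dist = Math.hypot(dx, dy) || 1; // avoid dividing by zero at the center
    p.vx += (dx / dist) * strength * dt;
    p.vy += (dy / dist) * strength * dt;
  };
}

// A repulsor is an attractor with negative strength.
const repulsor = (cx, cy, strength) => attractor(cx, cy, -strength);

function step(particles, behaviors, dt, fadeRate) {
  for (const p of particles) {
    for (const behave of behaviors) behave(p, dt);
    p.x += p.vx * dt;
    p.y += p.vy * dt;
    p.life -= fadeRate * dt; // particles fade away over time
  }
  return particles.filter((p) => p.life > 0);
}

// One particle spawned at the origin with the pointer moving to the right.
let particles = [spawnParticle({ x: 0, y: 0 }, { x: 1, y: 0 })];
const behaviors = [attractor(10, 0, 2), repulsor(-10, 0, 1)];
particles = step(particles, behaviors, 1 / 60, 0.5);
```

Keeping each force as its own small function is what makes complex motion manageable: behaviors can be added, removed, or tuned without touching the integration loop.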

For rendering, we used our go-to 3D engine, three.js. We’ve done many projects using this library and have created extensions for Hydra that make common development tasks even easier, such as turning a 2D mouse or touch position into 3D world space.
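To give a sense of what the 2D-to-3D conversion involves, here is a plain-math sketch under simplifying assumptions: a perspective camera sitting on the +Z axis, looking at the origin, with the pointer mapped onto the z = 0 plane. The `screenToWorld` function and its parameters are hypothetical and are not the Hydra extension mentioned above; for arbitrary camera setups, three.js provides `Vector3.unproject` and `Raycaster`.

```javascript
// Hypothetical sketch: map a pointer position in pixels to a point on the z = 0 plane.
// Assumes a perspective camera on the +Z axis looking at the origin.
function screenToWorld(px, py, width, height, fovDeg, cameraZ) {
  // Normalized device coordinates: x and y in [-1, 1], with y pointing up.
  const ndcX = (px / width) * 2 - 1;
  const ndcY = -(py / height) * 2 + 1;

  // Half the visible height and width of the z = 0 plane at distance cameraZ.
  const halfH = Math.tan((fovDeg * Math.PI) / 360) * cameraZ;
  const halfW = halfH * (width / height);

  return { x: ndcX * halfW, y: ndcY * halfH, z: 0 };
}

// Center of an 800x600 viewport, and its top-right corner.
const center = screenToWorld(400, 300, 800, 600, 90, 10);
const topRight = screenToWorld(800, 0, 800, 600, 90, 10);
```

With a 90 degree vertical field of view and the camera 10 units away, the visible plane is about 20 units tall, so the top-right corner lands near (13.33, 10, 0).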

For a final touch, we used our real-time post-processing engine, Nuke, to add a slight chromatic aberration and depth of field to the particles.

Mobile Apps

Even though the project was developed in Google Chrome on desktop, Hydra aids the development process by automatically accounting for a whole range of differences, such as mouse movement on desktop versus touch movement on devices. From the very first time we tested on a phone, the experience worked great with touch.

It took less than an hour to get the native apps set up and running on iOS and Android. To take it further, we added support for multi-touch so you can create multiple streams of particles using more fingers.

As with our desktop and mobile web experiences, we factored in device capability and performance to fine-tune the experience and make sure it runs smoothly on the wide range of devices we have in our office. In practice, this means reducing particle count, removing post-processing effects, or simplifying math calculations on less capable devices.
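One way to approach this kind of tuning is to scale quality from measured frame times. The sketch below is an assumption about how that could work, not a description of how Mira does it: `createQualityScaler`, the 16.7 ms frame budget, and the particle limits are all hypothetical.

```javascript
// Hypothetical sketch: scale particle count from measured frame times.
// The frame budget (about 60 fps) and the limits are illustrative assumptions.
function createQualityScaler({ min = 1000, max = 20000, budgetMs = 16.7 } = {}) {
  let count = max;
  let avgMs = budgetMs;

  return function update(frameMs) {
    // Smooth the measurement so a single slow frame does not cause a jump.
    avgMs = avgMs * 0.9 + frameMs * 0.1;

    if (avgMs > budgetMs * 1.2) {
      count = Math.max(min, Math.floor(count * 0.9)); // too slow: fewer particles
    } else if (avgMs < budgetMs * 0.8) {
      count = Math.min(max, Math.ceil(count * 1.05)); // headroom: add some back
    }

    // Drop post-processing once the particle count has fallen to half or less.
    return { count, postProcessing: count > max * 0.5 };
  };
}

// Simulate a slow device that takes 40 ms per frame.
const update = createQualityScaler();
let quality;
for (let i = 0; i < 200; i++) quality = update(40);
```

On the simulated slow device, the particle count falls to the minimum and post-processing is switched off; a fast device would stay at the maximum.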

Apple TV

The exciting part of the new Apple TV is that it can run third-party apps. Since tvOS doesn’t have a built-in web browser, we used Ejecta, a really interesting project that converts WebGL commands in JavaScript to OpenGL commands in native code. The majority of the work was creating a workflow to merge Hydra with Ejecta for easy and fun coding. Beyond that, we were up and running within a few hours.

Controlling Apple TV with iPhone

Since everything had been coming together quickly, we had some time to explore new native functionality. Controlling an Apple TV app with an iPhone seemed like a useful feature, so we wrote some native code that uses Apple’s Bonjour protocol to help the phone and TV discover each other, allowing them to communicate over a shared WebSocket connection.
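Once the two devices are connected, the JavaScript side only needs to agree on a message format. The sketch below shows one possible shape for touch messages; the format and the `encodeTouch` and `decodeTouch` helpers are hypothetical and are not the protocol used in the actual app. Discovery via Bonjour and the socket itself are left out.

```javascript
// Hypothetical message format for phone-to-TV touch events; not the app's real protocol.
function encodeTouch(id, x, y, screenW, screenH) {
  // Normalize positions to [0, 1] so they do not depend on the phone's resolution.
  return JSON.stringify({ type: 'touch', id, x: x / screenW, y: y / screenH });
}

function decodeTouch(message, tvW, tvH) {
  const data = JSON.parse(message);
  if (data.type !== 'touch') return null; // ignore unrelated messages
  // Scale the normalized position up to the TV's resolution.
  return { id: data.id, x: data.x * tvW, y: data.y * tvH };
}

// On the phone, this string would be passed to the socket's send method;
// on the TV, it would arrive in the socket's message handler.
const wire = encodeTouch(0, 187.5, 333.5, 375, 667);
const onTv = decodeTouch(wire, 1920, 1080);
```

Including a touch `id` in each message lets the TV keep separate particle streams for each finger, in line with the multi-touch support described earlier.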

VR

One of the most exciting new technologies, VR, is emerging in the browser. Because our platform allows us to write code so quickly, it took just a few hours to create a VR build that uses a webcam to control the particles and render the user inside the particle world.

Kinect Installation

As with VR, we created a build to test on a projector. Using Electron, we were able to hook into the Kinect API on Windows and use depth data to make the particles take the shape of a person, which we projected onto the back of the windows in our office.

Conclusion

To wrap up, we created the majority of this experiment in about a week and are extremely happy with the result. The web platform itself is immensely powerful and runs in so many places that, with a bit of modern thinking and technique, we are able to create experiences that truly take the web outside of the web.