NASA Rover

Case study

Working with NASA’s Jet Propulsion Laboratory, we’re thrilled to introduce the Open Source Rover digital experience. JPL has created a small-scale, fully drivable version of the Mars Rover that can be built at home or in schools using commercially available, off-the-shelf parts. Our role? To help JPL promote this groundbreaking release of elite technology through an interactive web experience.

UX and Design

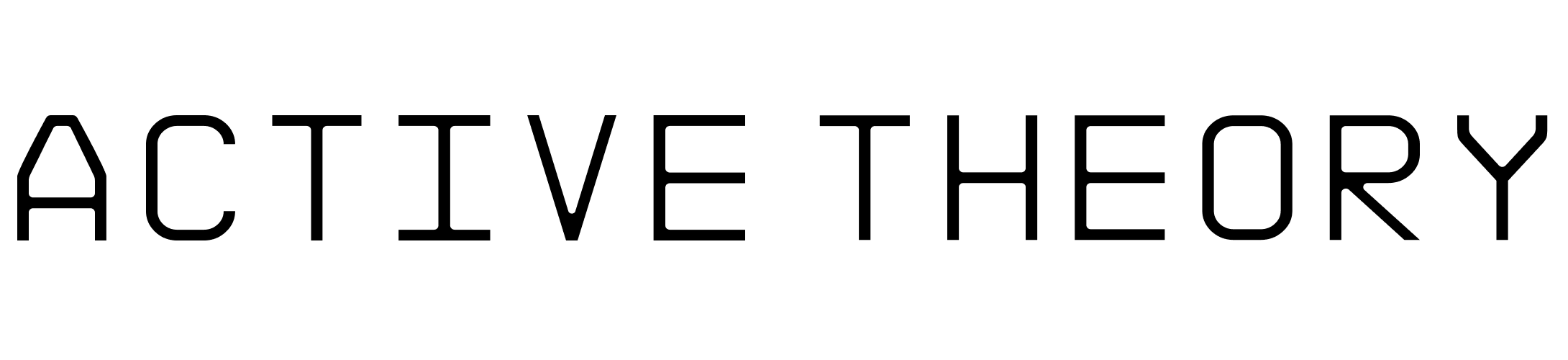

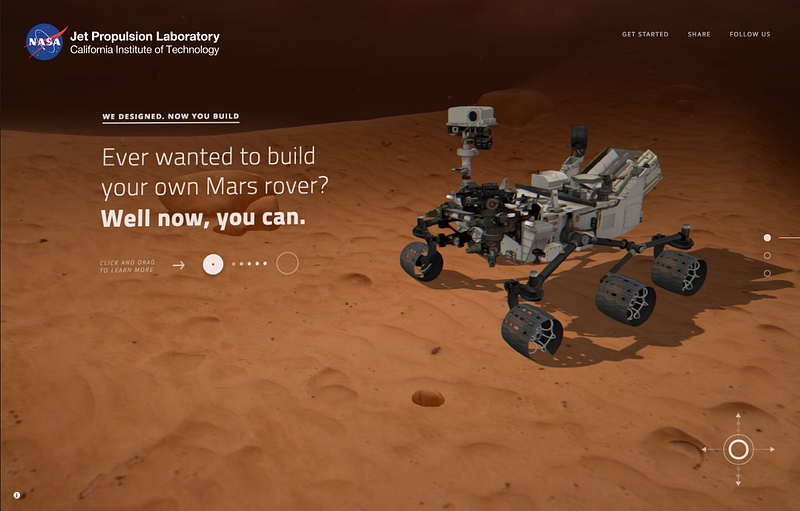

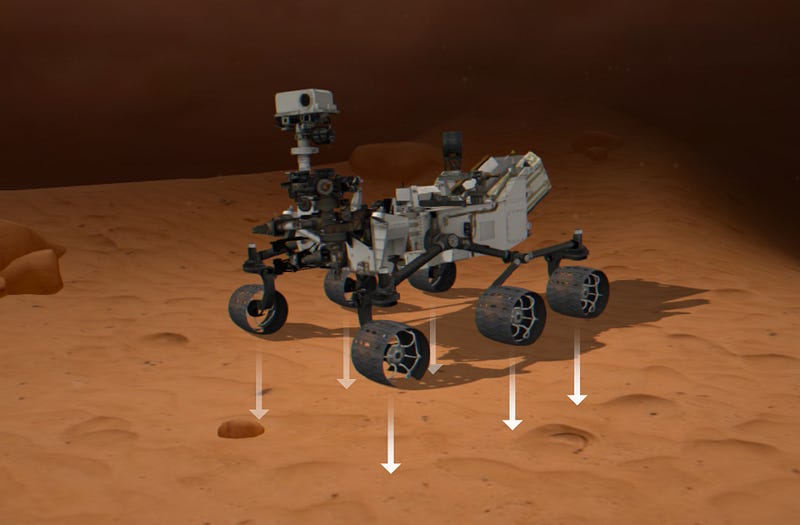

From the very beginning, we knew it was important to convey how much the design of the Open Source Rover was influenced by the actual Mars Rover. With this in mind, we decided it would be engaging and informative to let users drive both rovers and experience their similarities firsthand, through the driving mechanics and an artistic transition.

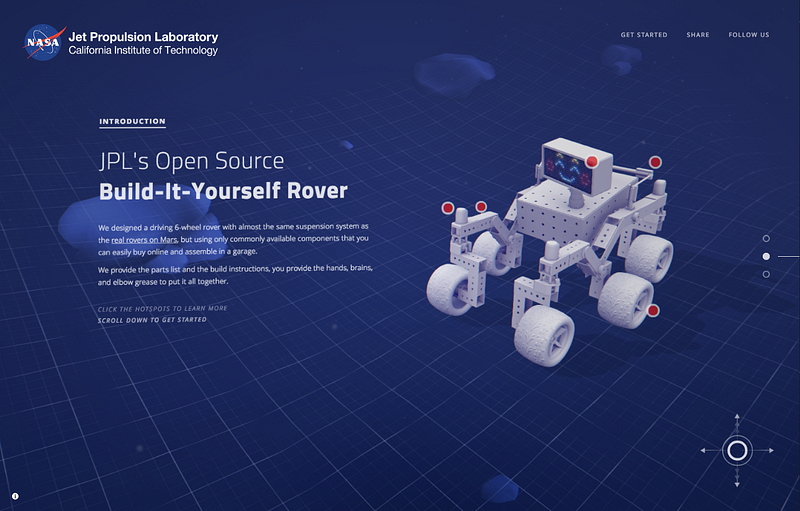

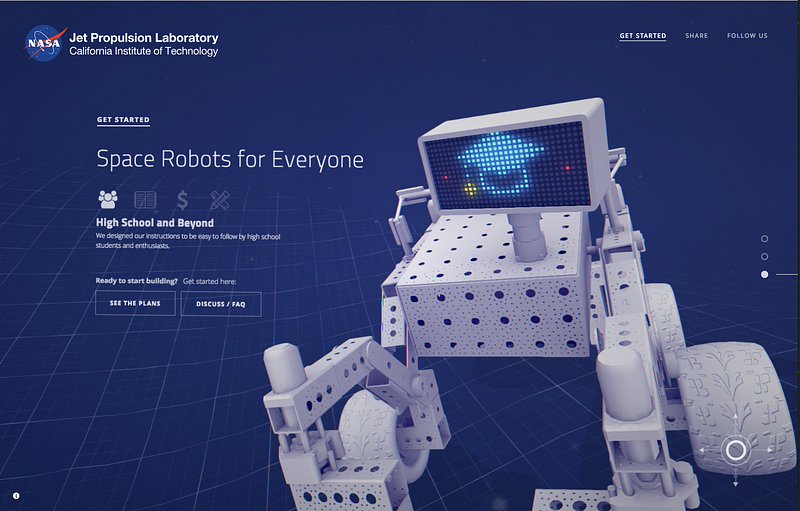

When rendering the Mars Rover, we used realistic lighting and textures to make it feel like the real thing. The Open Source Rover, on the other hand, was rendered using ambient occlusion and placed within a wireframe, blueprint-inspired environment.

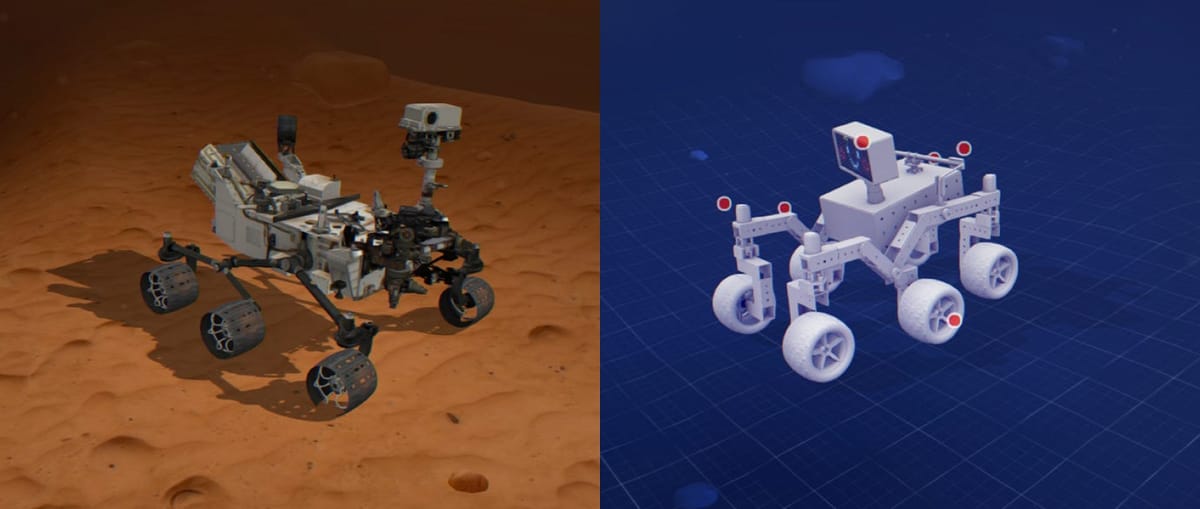

Above: Our initial design comps, which ended up very similar to the final output.

We went through two rounds of design (producing the screens above) before jumping into development, and the awesome JPL team gave us a lot of creative freedom.

Developing The Experience

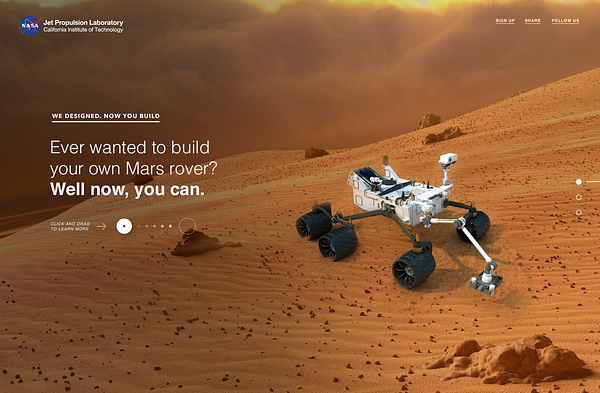

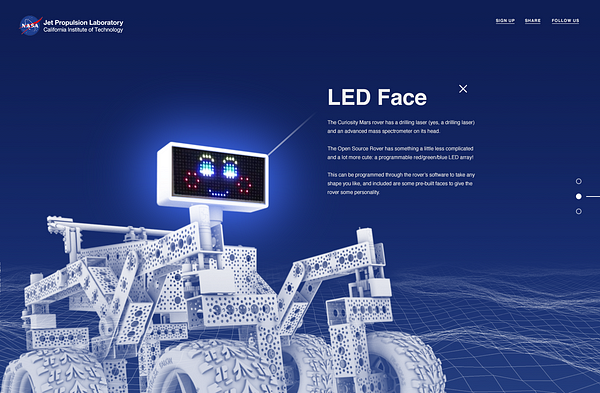

To leverage existing awareness of the Mars Rover program, the headline experience involves driving the rovers around an infinite terrain and seeing how they maneuver over obstacles, using keyboard controls, a mouse, or even a gamepad.

With a graphical transition, the Mars Rover transforms into JPL’s Open Source Rover, reinforcing the connection between the two, which share several features, notably the rocker-bogie suspension system.

Start to finish, the product is a hands-on learning experience. By encouraging the user to go for a drive, we demonstrate the rover’s key mobility features (suspension, pivoting and corner steering) in action.

If a deeper dive is needed, users can zoom into predefined hotspots, access the plans via GitHub, or join the conversation on the Open Source Rover discussion board.

Bringing The Rovers To The Web

From the start of the project, we knew that one of the main hurdles would be taking the precise, heavy CAD files of the rover and converting them into something suitable for real-time rendering. This isn’t a new problem for us, but each time we encounter it there is something unique to overcome.

Luckily for us, the Mars Rover, Curiosity, already existed in a real-time-appropriate format, cutting our work down significantly. However, the Open Source Rover, when converted from its CAD origins to the simplest format, still consisted of many millions of polygons.

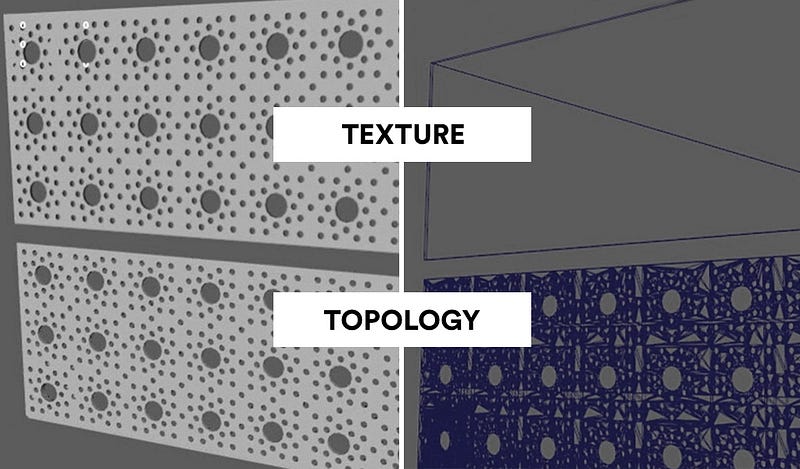

We were able to bring it down to around 12,000 triangles by remodeling everything from scratch in Maya. The most significant savings came from the pre-drilled holes in the casing, which accounted for millions of polygons. For these areas, which are predominantly flat with holes drilled into them, we carefully incorporated an alpha-test texture, which is applied in the model’s shader and stops the GPU from rendering the pixels that fall inside a hole. While not a one-to-one match for actual geometry, you can see below that it’s convincing enough.

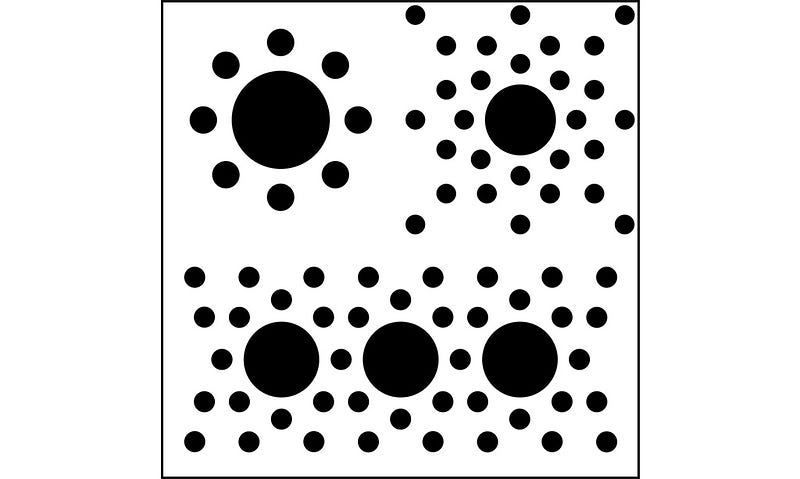

On realizing there were several different layouts of holes for different sections of the casing, we created templates for each pattern and merged them into a single texture, shown below. This made the UV layout of the model more complicated, because only a section of the texture has to be repeated, but it allowed us to reduce the number of draw calls by using one texture for the entire rover.

Using an alpha test (discarding certain pixels entirely) instead of transparency was a big win, as it let us avoid the complication of transparency sorting. With so many overlapping parts, this was by far the easiest solution, and more often than not, easy also means more efficient.
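As a rough illustration of the alpha-test idea, the sketch below pairs a small WebGL 1 fragment shader (held in a TypeScript string) with a CPU-side reference of the same test. The uniform names, the 0.5 cutoff and the `isDiscarded` helper are assumptions made for this example, not the shader used in the project.

```typescript
// Hypothetical fragment shader: names and the cutoff value are assumptions.
const alphaTestFragmentShader: string = `
precision mediump float;
uniform sampler2D uHoleMask;  // atlas of hole patterns, alpha = 0 inside a hole
uniform float uAlphaCutoff;   // for example 0.5
varying vec2 vUv;

void main() {
  vec4 texel = texture2D(uHoleMask, vUv);
  // Alpha test: drop the fragment entirely instead of blending it,
  // so no transparency sorting is needed.
  if (texel.a < uAlphaCutoff) discard;
  gl_FragColor = vec4(texel.rgb, 1.0);
}
`;

// CPU reference of the same test, handy for reasoning about a mask.
// `rgba` is tightly packed 8-bit RGBA data of `width` x `height` pixels.
function isDiscarded(
  rgba: Uint8Array,
  width: number,
  height: number,
  u: number,
  v: number,
  cutoff: number = 0.5
): boolean {
  // Nearest-neighbour lookup with UVs clamped to the texture.
  const x = Math.min(width - 1, Math.max(0, Math.floor(u * width)));
  const y = Math.min(height - 1, Math.max(0, Math.floor(v * height)));
  const alpha = rgba[(y * width + x) * 4 + 3] / 255;
  return alpha < cutoff;
}
```

Because every fragment is either fully kept or fully dropped, the depth buffer handles overlapping parts without any back-to-front ordering.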

Convincing Movement

The next major hurdle was animating the rovers realistically, which wasn’t as straightforward as animating, say, a car. However, as the Open Source Rover had already been built by NASA engineers, we were able to get some great reference material and explanations.

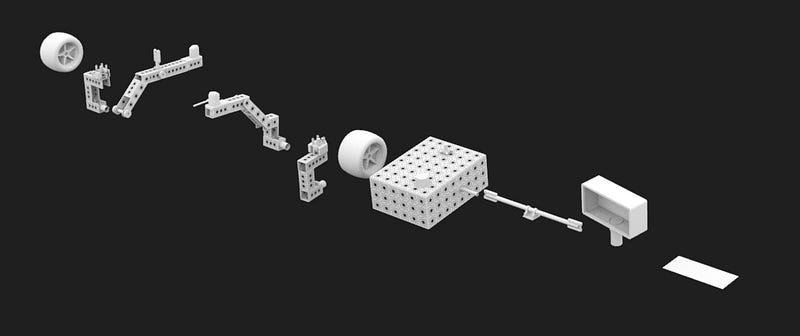

As this was a hard-surface model — rather than something organic like a human character — we went with the approach of separating the object into the different moving parts, rather than rigging it with bones and adding more complexity and data to load.

The rover consisted of 11 different sections, each exported relative to its own rotation axis. On loading, we arranged these sections into the complete rover hierarchy.

The movement was all driven by the rocker-bogie suspension system, an arrangement of parts that allows all six wheels of the rover to stay in contact with the ground, improving stability. With this in mind, we decided to procedurally generate the animation from the ground up, literally.

Starting with positioning the six wheels, we shot six rays, one from each wheel, to find the height at which each ray hit the terrain. We then used these height values as inputs to help us calculate the necessary rotations for each section.

Instead of calculating a complete inverse-kinematics system, we applied some rather simple trigonometry rules that gave an imperfect, yet convincing motion. We started with one rocker-bogie (one side of the rover), and then duplicated and calculated the rotation between the two.
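To give a feel for the kind of trigonometry involved, here is a minimal sketch that turns three wheel heights into approximate bogie and rocker angles for one side. It is not the project's actual math: the function names, the span parameters, the assumption that the bogie carries the middle and rear wheels, and the averaging of the two rocker angles for the body pitch are all illustrative choices.

```typescript
// All names and dimensions here are illustrative assumptions.
interface SideHeights {
  front: number;  // terrain height under the front wheel
  middle: number; // terrain height under the middle wheel
  rear: number;   // terrain height under the rear wheel
}

interface SideAngles {
  bogie: number;  // bogie pitch in radians, relative to horizontal
  rocker: number; // rocker pitch in radians, relative to horizontal
}

// Assumes the bogie carries the middle and rear wheels, and the rocker
// links the bogie pivot to the front wheel.
// bogieSpan:  horizontal distance between the middle and rear wheels.
// rockerSpan: horizontal distance between the bogie pivot and the front wheel.
function solveSide(
  h: SideHeights,
  bogieSpan: number,
  rockerSpan: number
): SideAngles {
  // The bogie tilts to follow the slope between its two wheels.
  const bogie = Math.atan2(h.middle - h.rear, bogieSpan);
  // Approximate the bogie pivot as sitting midway between its wheels.
  const pivotHeight = (h.middle + h.rear) / 2;
  // The rocker tilts to follow the slope from that pivot to the front wheel.
  const rocker = Math.atan2(h.front - pivotHeight, rockerSpan);
  return { bogie, rocker };
}

// Assumed simplification: the body pitches by the average of both rockers.
function bodyPitch(left: SideAngles, right: SideAngles): number {
  return (left.rocker + right.rocker) / 2;
}
```

Both angles are measured against the horizontal, so a bogie joint parented to the rocker would use `bogie - rocker` as its local rotation.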

Although this worked, we started to see performance drop considerably. The cause was the six ray-casts into the terrain: the number of polygons on the terrain (~24,000) created too many calculations to maintain rendering at 60fps, or even 30fps! Even after shifting this heavy lifting to a separate thread, the animation was still jumpy, as it waited for the next set of ray collision data to come back.

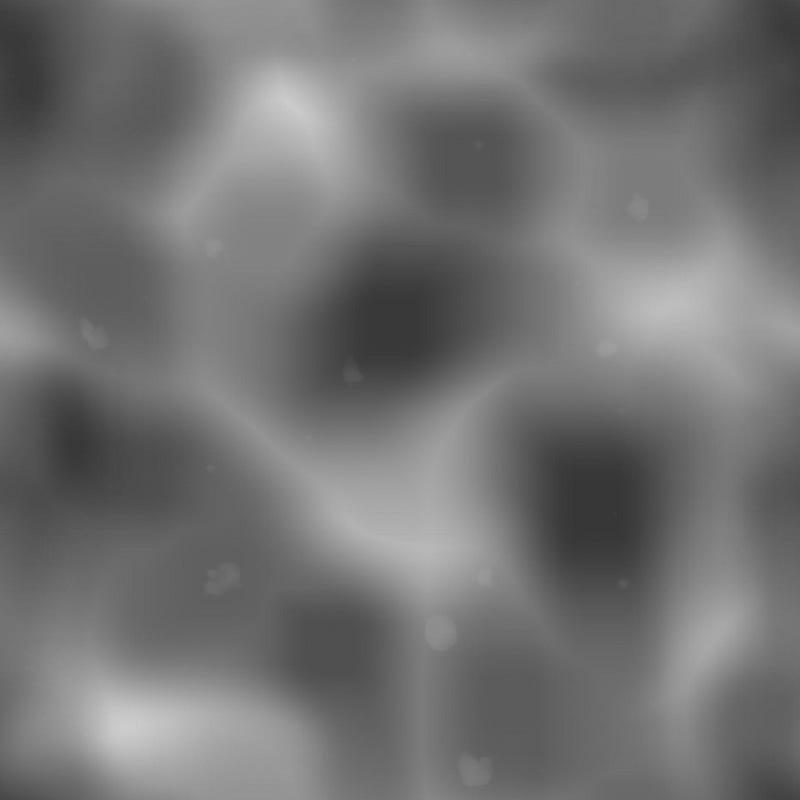

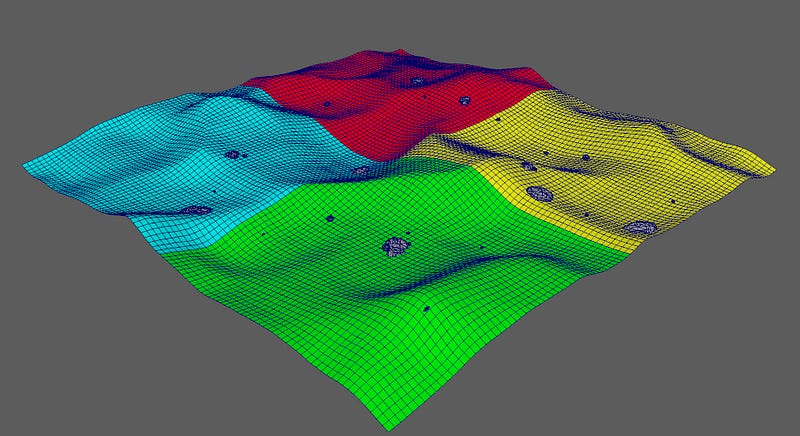

We solved this issue by instead pre-generating a height map texture of the terrain tile, as seen below. This was baked from Maya and encodes the height of the terrain above a flat plane (whiter = higher terrain).

The texture’s pixel data was stored in CPU memory, and using the rover’s location on the terrain, we could derive which pixel to look up for each wheel to get the height value.

Finally, we performed four pixel lookups for each wheel. By including neighboring pixels, we could ease between the values to get a smooth transition as the rover moves around, avoiding harsh stepping between pixel values.
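One plausible way to ease between four neighboring pixels is bilinear interpolation, sketched below. The `HeightMap` shape, the use of a `Float32Array` rather than raw 8-bit pixel data, the wrap-around at the tile edges and the world-to-pixel mapping are all assumptions for the sake of the example.

```typescript
// Hypothetical height-map sampler: names, layout and scaling are assumptions.
interface HeightMap {
  data: Float32Array; // one height value per pixel, row-major
  size: number;       // the map is size x size pixels
  tileSize: number;   // world-space width of the repeating terrain tile
}

// Wrap an index into [0, n), including for negative values.
function wrapIndex(i: number, n: number): number {
  return ((i % n) + n) % n;
}

function sampleHeight(map: HeightMap, worldX: number, worldZ: number): number {
  // World position to continuous pixel coordinates.
  const px = (worldX / map.tileSize) * map.size;
  const pz = (worldZ / map.tileSize) * map.size;
  const x0 = Math.floor(px);
  const z0 = Math.floor(pz);
  const fx = px - x0; // fractional position between pixel columns
  const fz = pz - z0; // fractional position between pixel rows

  const at = (x: number, z: number): number =>
    map.data[wrapIndex(z, map.size) * map.size + wrapIndex(x, map.size)];

  // Four lookups: the pixel under the wheel and its three neighbours.
  const h00 = at(x0, z0);
  const h10 = at(x0 + 1, z0);
  const h01 = at(x0, z0 + 1);
  const h11 = at(x0 + 1, z0 + 1);

  // Blend along x on both rows, then blend the two rows along z.
  const top = h00 + (h10 - h00) * fx;
  const bottom = h01 + (h11 - h01) * fx;
  return top + (bottom - top) * fz;
}
```

Calling `sampleHeight` once per wheel replaces a ray-cast against the terrain mesh with a handful of array reads, and its cost does not depend on the terrain's polygon count.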

This technique performed beyond expectations, bringing our frame rate back to 60fps, with plenty to spare. It also had the added benefit of letting us bake other objects, such as rocks, into the height map as well, at no extra cost.

Infinite Exploration

Another interesting challenge was creating infinite terrain, allowing the user to explore and not feel constricted to an area with boundaries.

This is something that we have covered in the past, in our work with Google and Pottermore. However, for this specific use-case, we were able to make it even more efficient, while also reducing the amount of geometry rendered.

The solution was to create just one repeatable tile (paying attention to make sure the normals are also repeatable). We then split this tile into 4 quadrants as it is loaded (illustrated below). We assigned an index attribute to each vertex in the tile (0 to 3), to be referred to later on.

By splitting the tile into quadrants, we reduced the amount of geometry rendered by at least a factor of three. The usual alternative is to duplicate the entire tile whenever the user passes over a tile boundary, so when a user crosses a corner, 3 tiles would need to be rendered to avoid having visible edges in the scene.

The other improvement we made was to perform the quadrant placement in the vertex shader, and not on the CPU, which is how we’ve done it in the past. This is much more efficient as it reduces the amount of data passed between the CPU and GPU, a common source of bottlenecks. Below you can see this in action.

To keep the tile geometry at a reasonable file size, we made the scene quite foggy, so that we could reduce the draw distance. This also matched the visual style of the experience.
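The placement logic can be sketched on the CPU as follows. This is an illustrative guess at the arithmetic rather than the project's shader: the indexing scheme (bit 0 for x, bit 1 for z), the tile being centered on the origin, and the function names are all assumptions. Each quadrant is snapped to whichever repeat of itself lies nearest the camera.

```typescript
// Illustrative only: names and the quadrant indexing scheme are assumptions.
type Vec2 = { x: number; z: number };

// Centre of quadrant `index` (0 to 3) in tile-local space,
// with the whole tile centred on the origin.
function quadrantCentre(index: number, tileSize: number): Vec2 {
  const q = tileSize / 4;
  return {
    x: (index & 1) !== 0 ? q : -q,
    z: (index & 2) !== 0 ? q : -q,
  };
}

// Offset that moves a quadrant to the repeat of itself nearest the camera.
// Every vertex carrying this quadrant index gets the same offset added.
function quadrantOffset(index: number, tileSize: number, camera: Vec2): Vec2 {
  const c = quadrantCentre(index, tileSize);
  return {
    x: Math.round((camera.x - c.x) / tileSize) * tileSize,
    z: Math.round((camera.z - c.z) / tileSize) * tileSize,
  };
}
```

In a vertex shader the same arithmetic could be written with `floor(v + 0.5)` in place of `Math.round`, with the quadrant index arriving as the per-vertex attribute and the camera position as a single uniform, so only one small value changes per frame.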

Conclusion

We were over the moon to be able to work with NASA JPL, and this project proved to be extremely fun and challenging — just the kind of work that keeps us excited.

The Open Source Rover experience is accessible via JPL’s website.