Netflix Our Planet

Netflix FYSEE 2019

Netflix FYSEE (a play on ‘For Your Consideration’) is Netflix’s yearly event that combines discussion panels, screenings and pop-up installation experiences to promote its original programming for the upcoming Emmy Awards season.

This year, we worked alongside We’re Magnetic, Plan8 and Gamma Fabrication to create an interactive experience for ‘Our Planet’, a nature documentary narrated by David Attenborough.

The Story of Our Planet

‘Our Planet’ is a stunning eight-part series that explores the wonders of our natural world. The show, which showcases the diversity of life on Earth, took four years to shoot, with over 600 crew members filming in 50 countries.

With so much captivating visual content to leverage, we were tasked with creating an exciting and innovative interactive pop-up activation that leans on the visuals as the key storytelling piece.

The Concept

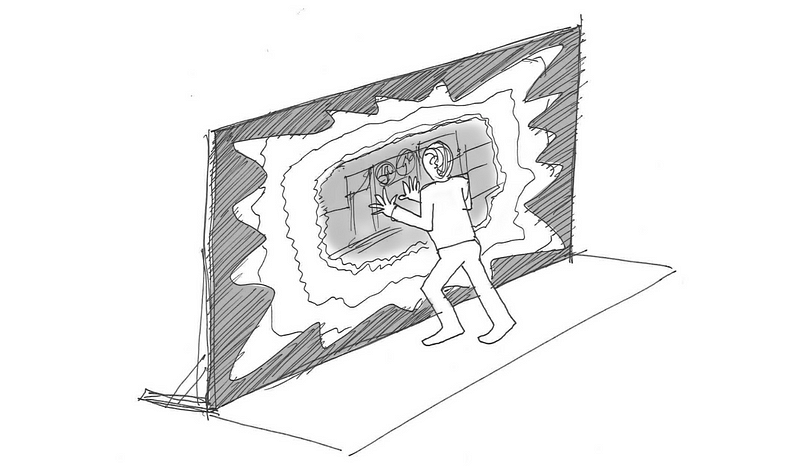

To showcase the Our Planet content, we wanted an execution that would display the footage on a large screen. Since we had control over the lighting and setup of the space, we decided to project onto a stretchy fabric screen. With this technique, users could walk up to the screen and push into the fabric, which would influence the footage being projected.

Once we had decided on the technology, we needed to determine how best to use the footage in three scenarios: when no one was pushing, when one user was pushing, and when multiple users were pushing.

The story we wanted to tell involved playing panning footage of different environments and encouraging users to push into the fabric to learn about what’s going on in those environments. The user flow below was an early exploration of this:

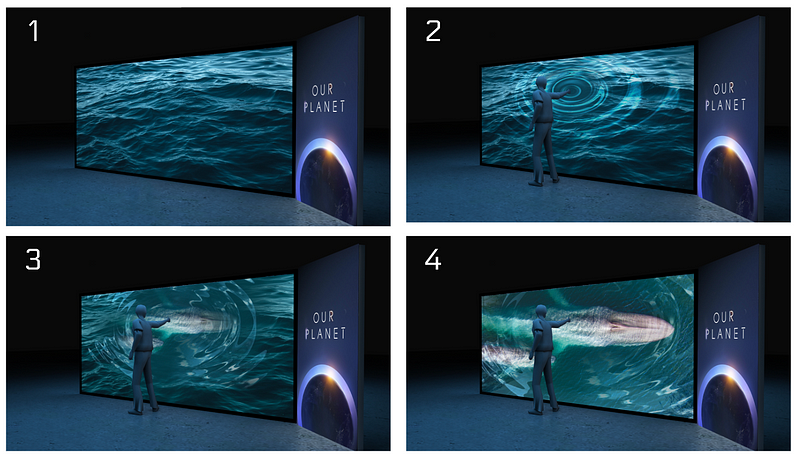

- When no one interacts, the projection shows panning shots of the ocean

- A user pushes into the fabric, creating ripples in the water

- A whale begins to emerge as the user pushes in deeper

- When a depth threshold is reached, the whale footage takes over the screen

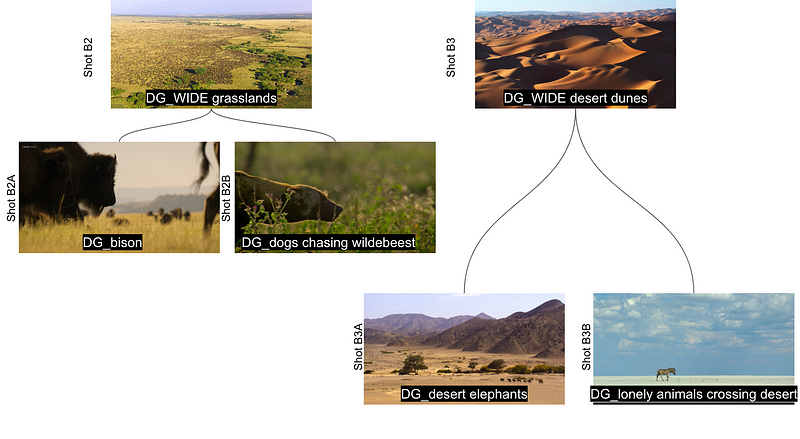
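The early user flow above is essentially a mapping from push depth to a stage of the experience. Here is a minimal sketch, assuming the push depth has already been normalized to 0.0 (fabric at rest) through 1.0 (full push). The `Phase` names and the three threshold values are hypothetical, not the production numbers.

```python
from enum import Enum


class Phase(Enum):
    IDLE = "panning ocean footage"
    RIPPLES = "ripples in the water"
    EMERGING = "whale emerging"
    TAKEOVER = "whale footage takes over"


# Hypothetical thresholds on normalized push depth (0.0 = resting fabric, 1.0 = full push).
RIPPLE_START = 0.05
EMERGE_START = 0.35
TAKEOVER_AT = 0.8


def phase_for_depth(depth: float) -> Phase:
    """Map a normalized push depth to a stage of the early user flow."""
    if depth >= TAKEOVER_AT:
        return Phase.TAKEOVER
    if depth >= EMERGE_START:
        return Phase.EMERGING
    if depth >= RIPPLE_START:
        return Phase.RIPPLES
    return Phase.IDLE


def whale_opacity(depth: float) -> float:
    """Fade the whale in linearly between EMERGE_START and TAKEOVER_AT, clamped to [0, 1]."""
    t = (depth - EMERGE_START) / (TAKEOVER_AT - EMERGE_START)
    return min(1.0, max(0.0, t))
```

For example, `phase_for_depth(0.5)` returns `Phase.EMERGING` with the whale at roughly one-third opacity. This sketch is stateless; a real build would probably want to latch the takeover once the threshold is reached, so the whale footage doesn't vanish the moment a user lets go.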

With this core interaction established, we next needed to work out the flow of the entire experience. Alongside We’re Magnetic, we were able to source panning clips of nine different environments that users could engage with.

After the intro played, the experience would randomly cycle through the nine environments, playing 2–3 clips of each before moving on to the next.

For a user, walking up to the screen and pushing would dive them into the current environment. For example (as shown in the image below), pushing during the ‘DG_WIDE_grasslands’ clips would transition them into the ‘DG_bison’ or ‘DG_dogs_chasing_wildebeest’ scenes.
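The cycling and dive-in logic can be sketched as a shuffled playlist of wide clips plus a lookup of detail scenes per environment. This is a minimal sketch, not the project's playback code: the catalogue structure, the `idle_playlist` and `dive_target` helpers, and every clip name except ‘DG_WIDE_grasslands’, ‘DG_bison’ and ‘DG_dogs_chasing_wildebeest’ are hypothetical.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical catalogue shape: environment -> {"wide": [panning clips], "detail": [dive-in scenes]}
Catalogue = Dict[str, Dict[str, List[str]]]


def idle_playlist(catalogue: Catalogue, rng: random.Random,
                  min_clips: int = 2, max_clips: int = 3) -> List[Tuple[str, str]]:
    """Shuffle the environments, then queue 2-3 random wide clips from each in turn."""
    environments = list(catalogue)
    rng.shuffle(environments)
    playlist = []
    for env in environments:
        wides = catalogue[env]["wide"]
        count = min(len(wides), rng.randint(min_clips, max_clips))
        for clip in rng.sample(wides, count):
            playlist.append((env, clip))
    return playlist


def dive_target(catalogue: Catalogue, env: str, rng: random.Random) -> str:
    """Pick which detail scene a push during this environment's wide clips dives into."""
    return rng.choice(catalogue[env]["detail"])


# Example catalogue: the grasslands names come from the article; the "_a"/"_b"/"_c"
# suffixes and the ocean entries are made up for illustration.
CATALOGUE: Catalogue = {
    "grasslands": {
        "wide": ["DG_WIDE_grasslands_a", "DG_WIDE_grasslands_b", "DG_WIDE_grasslands_c"],
        "detail": ["DG_bison", "DG_dogs_chasing_wildebeest"],
    },
    "ocean": {
        "wide": ["OCEAN_WIDE_a", "OCEAN_WIDE_b"],
        "detail": ["OCEAN_whale"],
    },
}
```

Passing in a seeded `random.Random` keeps the sequence reproducible, which helps when previewing a build off site.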

Content and Transitions Exploration

To dive users into an environment, we needed a transition that looked natural and behaved the way users expected.

Initially, we worked on a circle concept that mirrored the eclipse-style opening of the show.

After putting together a few motion tests and playing with an early build, we decided to move away from the circle and instead preserve the organic shapes and movements created by a hand pushing on the fabric. This approach suited the show’s theme better, and felt more natural and responsive to the users interacting with it.

Early motion comps exploring the circle transition.

Another test involved applying tactile effects to give feedback to the interacting user. We tried a fluid simulation to highlight the trail left by a hand on the fabric, but eventually moved away from it because it slightly distorted the videos, something we wanted to avoid when showcasing the Netflix footage.

The Physical Setup

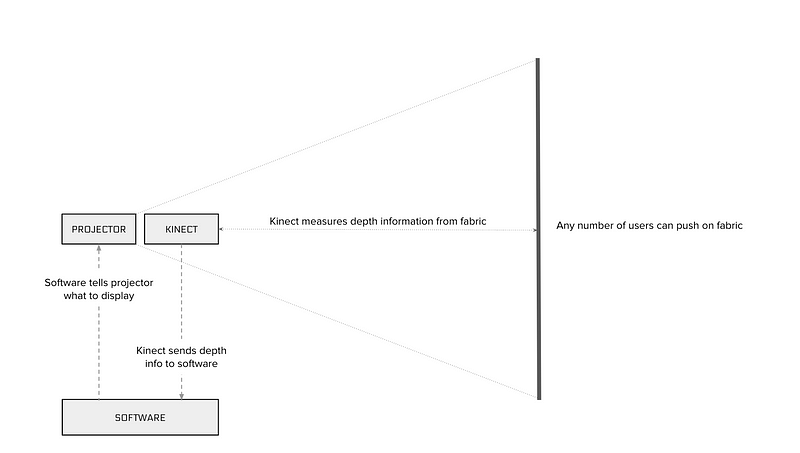

Working alongside Gamma Fabrication, we put together a plan for the hardware setup. It consisted of a Kinect to measure depth data from pushes on the fabric, a software build to interpret that data, and a projector to serve the video content and transitions accordingly.

Throughout the project we worked with two hardware setups: an office prototype (left image below) and the full-scale version on site at Raleigh Studios in LA.

A prototype provided by Gamma for the AT Office (left) and a concept diagram for the hardware setup (right).

Kinect Depth Data

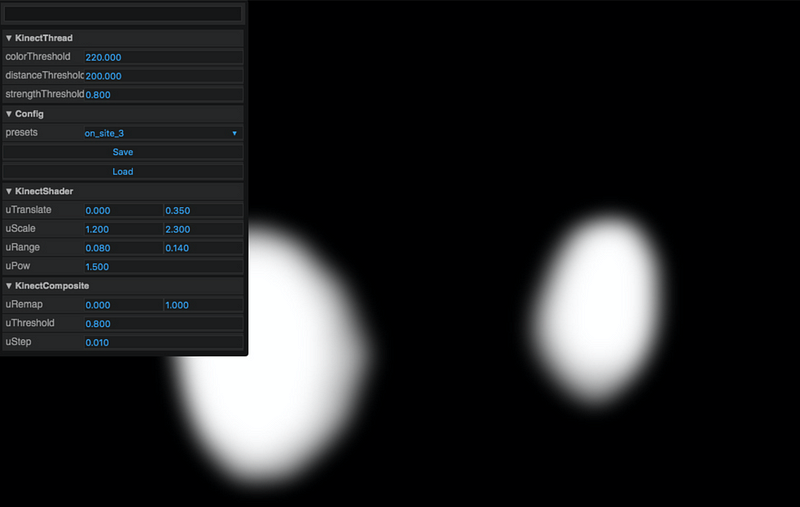

To process user interactions with the stretch fabric, we used a Kinect. The Kinect sent depth data as an image containing only a red channel, with values ranging from 0 to 255 (0 to 100%). The closer an object was to the Kinect (e.g. when a user pushed into the fabric), the darker the image; the farther the object (e.g. when no one was pushing), the redder the image. Because this data was image-based, we used image processing in shaders to interpret the interaction and then serve videos and transitions accordingly.
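To make the encoding concrete, here is a minimal sketch of turning a depth reading into that 0–255 red value. It assumes a linear mapping, and its 0.5–4.5 m defaults are borrowed from the Kinect v2's nominal depth range. The article doesn't state the sensor model, mapping or range, so `depth_to_red` and its parameters are hypothetical.

```python
def depth_to_red(depth_mm: float, near_mm: float = 500.0, far_mm: float = 4500.0) -> int:
    """Encode a depth reading as an 8-bit red value.

    near_mm -> 0 (black, close to the sensor); far_mm -> 255 (fully red, far away).
    Readings outside the range are clamped. The linear mapping and the default range
    are assumptions, not the project's actual settings.
    """
    t = (depth_mm - near_mm) / (far_mm - near_mm)
    t = min(1.0, max(0.0, t))
    return round(t * 255)


resting = depth_to_red(1500)          # fabric at rest, 1.5 m from the sensor
full_push = depth_to_red(1500 - 300)  # a ~30 cm push toward the sensor
```

Under these assumed ranges, the resting fabric encodes to 64 and a full push to 45. The whole interaction spans only about 19 of the 256 levels, which shows why the raw data was so sensitive.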

This approach came with a few challenges. The image was low resolution and noisy, and the field of view included unnecessary items such as the hardware holding up the frame. Also, while the Kinect was positioned 1.5 meters behind the screen, users pushed in only around 30cm. That push read as just a small fluctuation in the depth data, which made the initial builds very sensitive and required a lot of refinement.

To address this, the first step was to crop the Kinect texture around the screen to remove unneeded pixels, so that the whole image represented the canvas. Low-pass filters (blurs) were then applied to smooth the image and reduce noise by averaging neighboring pixels.
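The crop-then-blur step can be illustrated on the CPU with plain lists. This is a minimal sketch: the project did this work in shaders, where each fragment would perform the same neighborhood average. The box blur is one simple low-pass filter, and the `crop` and `box_blur` helpers are hypothetical; the article doesn't say which blur kernels were used.

```python
from typing import List, Sequence

Image = List[List[float]]


def crop(img: Sequence[Sequence[float]], x0: int, y0: int, x1: int, y1: int) -> Image:
    """Keep only the pixels inside [x0, x1) x [y0, y1), i.e. the screen area."""
    return [list(row[x0:x1]) for row in img[y0:y1]]


def box_blur(img: Sequence[Sequence[float]], radius: int = 1) -> Image:
    """Low-pass filter: replace each pixel with the mean of its (2r+1) x (2r+1) neighborhood.

    Near the edges the window is clipped to the image, so fewer pixels are averaged.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out
```

A larger radius suppresses more sensor noise but also softens the edges of a hand print, so the radius is one of the parameters worth exposing for calibration.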

Dynamic levels were also applied to remap the value range, so that instead of representing the depth values of the whole room, the image represented only the narrow band of depth the fabric moved through. Once calibrated, the image was fully red at screen level (no interaction) and fully black when a user pushed in all the way (~30cm).
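A levels adjustment is a clamped linear remap between a black point and a white point. Here is a minimal sketch, assuming a hypothetical calibration in which the white point is the average value of the fabric at rest and the black point sits a fixed number of levels darker (the value reached at a full push). The helper names and that calibration rule are illustrative, not the project's code.

```python
from typing import List, Sequence, Tuple


def apply_levels(value: float, black_point: float, white_point: float) -> float:
    """Remap value so black_point -> 0.0 and white_point -> 1.0, clamping outside that band."""
    if white_point == black_point:
        raise ValueError("black_point and white_point must differ")
    t = (value - black_point) / (white_point - black_point)
    return min(1.0, max(0.0, t))


def calibrate_levels(resting_frame: Sequence[Sequence[float]],
                     push_span: float) -> Tuple[float, float]:
    """Hypothetical calibration: white point = mean value of the fabric at rest,
    black point = push_span levels darker (the value reached at a full push)."""
    values = [v for row in resting_frame for v in row]
    white = sum(values) / len(values)
    return white - push_span, white


def levels_image(img: Sequence[Sequence[float]], black_point: float,
                 white_point: float) -> List[List[float]]:
    """Apply the same levels remap to every pixel."""
    return [[apply_levels(v, black_point, white_point) for v in row] for row in img]
```

After this remap, 1.0 means the fabric is at rest and 0.0 means a full push, matching the fully red / fully black behavior described above. A per-pixel white point (a captured resting frame) would also compensate for uneven fabric tension; a single global value keeps the sketch short.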

With the image data prepared, the resulting texture was sent to a separate thread. There, using computer vision, we implemented a blob detection algorithm to determine each hand’s coordinates, velocity and strength (how far into the fabric it was). The final output consisted of a clean depth map texture (black and white) and an array of “touches” (coordinates, velocity and strength), one for every touch on the screen. The clean depth map was used by shaders on screen to create borders, trails and other effects. The touch array triggered 3D sounds (provided by our friends at Plan8) at different intensities, giving feedback to the user.
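The article doesn't say which blob detection algorithm was used. As a stand-in, here is a minimal sketch of one common approach, connected-component labelling by flood fill, run on a cleaned depth map normalized so that 1.0 is rest and 0.0 is a full push. The threshold, the minimum blob size, the strength-weighted centroid and the nearest-neighbor velocity matching are all assumptions. The separate thread, the shaders and the Plan8 audio are not modelled.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Touch:
    x: float          # strength-weighted centroid, in pixels
    y: float
    strength: float   # 0.0 = fabric at rest, 1.0 = fully pushed
    vx: float = 0.0   # pixels per second
    vy: float = 0.0


def find_touches(depth: Sequence[Sequence[float]], threshold: float = 0.2,
                 min_pixels: int = 3) -> List[Touch]:
    """Find 4-connected blobs whose push strength (1 - depth) exceeds threshold."""
    h, w = len(depth), len(depth[0])
    seen = [[False] * w for _ in range(h)]

    def pressed(x: int, y: int) -> bool:
        return 1.0 - depth[y][x] > threshold

    touches = []
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx] or not pressed(sx, sy):
                continue
            seen[sy][sx] = True
            stack, pixels = [(sx, sy)], []
            while stack:  # iterative flood fill over pressed neighbors
                x, y = stack.pop()
                pixels.append((x, y, 1.0 - depth[y][x]))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and not seen[ny][nx] and pressed(nx, ny):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            if len(pixels) < min_pixels:
                continue  # drop specks left over from sensor noise
            total = sum(s for _, _, s in pixels)
            cx = sum(x * s for x, _, s in pixels) / total
            cy = sum(y * s for _, y, s in pixels) / total
            touches.append(Touch(cx, cy, max(s for _, _, s in pixels)))
    return touches


def track_velocity(previous: List[Touch], current: List[Touch], dt: float,
                   max_jump: float = 5.0) -> List[Touch]:
    """Estimate velocity by matching each touch to the nearest touch from the last frame."""
    for t in current:
        nearest = min(previous, key=lambda p: math.hypot(p.x - t.x, p.y - t.y), default=None)
        if nearest is not None and math.hypot(nearest.x - t.x, nearest.y - t.y) <= max_jump:
            t.vx = (t.x - nearest.x) / dt
            t.vy = (t.y - nearest.y) / dt
    return current
```

Greedy nearest-neighbor matching can pair two current touches with the same previous one when hands are close together. A production tracker would likely use a one-to-one assignment instead; the greedy version keeps the sketch short.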

To allow for quick iteration, we used our internal Hydra GUI toolkit to build editable controls for each parameter of the Kinect calibration (e.g. crop, levels, blur settings, depth range). These were saved as presets, allowing us to switch between the office prototype and the live installation. Finally, we built a system to record image sequences from the Kinect and play them back through our build, so we could preview, iterate and change settings on the fly without needing to be on site.
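Saving calibration presets amounts to serializing a named set of parameters. Hydra is internal and its API isn't described here, so this is a minimal stand-in using JSON. The `KinectCalibration` fields, the preset names and all the values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict


@dataclass
class KinectCalibration:
    """Hypothetical calibration parameters; field names are illustrative."""
    crop_x0: int
    crop_y0: int
    crop_x1: int
    crop_y1: int
    blur_radius: int
    black_point: float
    white_point: float
    near_mm: float
    far_mm: float


def save_presets(presets: Dict[str, KinectCalibration]) -> str:
    """Serialize named presets (e.g. one per hardware setup) to a JSON string."""
    return json.dumps({name: asdict(cal) for name, cal in presets.items()},
                      indent=2, sort_keys=True)


def load_presets(text: str) -> Dict[str, KinectCalibration]:
    """Rebuild the presets written by save_presets."""
    return {name: KinectCalibration(**fields) for name, fields in json.loads(text).items()}


# Made-up values for two setups, one per location.
PRESETS = {
    "office_prototype": KinectCalibration(40, 30, 470, 390, 2, 45.0, 64.0, 500.0, 4500.0),
    "raleigh_studios": KinectCalibration(12, 8, 500, 410, 3, 50.0, 70.0, 500.0, 4500.0),
}
```

Keeping one preset per physical setup means switching between the office prototype and the venue is a single selection, not a manual recalibration.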

Live at FYSEE

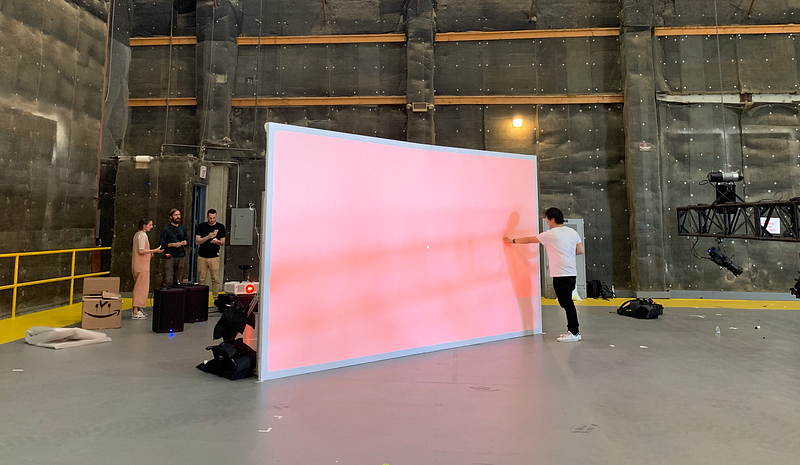

On May 5, 2019, the project went live at Raleigh Studios in LA. The exhibit was in the activations area alongside 15+ other exhibits from notable Netflix originals such as Narcos, House of Cards and Ozark. It’s live until June 9.

Attendees of the FYSEE event interacting with the Our Planet installation.

Future uses of the technology

The Our Planet install was the first project in which we used a flexible fabric screen as the centerpiece of a campaign.

While installing the tech on site, we also put together some quick experiments to explore other possibilities of the technology. Beyond being visually captivating, pushing into the fabric and getting immediate tactile feedback turned out to be genuinely fun and addictive.

There’s lots of room to explore creative applications of this tech. Art exhibitions, music festivals and tech conferences are perfect environments to engage a creative crowd, and we look forward to continuing to experiment with it in the future.

Technical experiments to showcase some of the future possibilities of this technology.