Sundance New Frontier

The Sundance Film Festival team approached us last fall with the opportunity to collaborate on their upcoming New Frontier experience. Launched in 2007, New Frontier is their annual program that fosters independent artists and creative technologists in exploring new media, immersive design, transmedia activism and more.

We had already launched a range of digital events on our Dreamwave Platform throughout the year and had incorporated many of the features and ideas Sundance was looking for, so we felt confident partnering with the team on New Frontier (NF).

Our First VR Event

While we’d previously explored 3D environments, avatars, and rooms with Dreamwave events like Secret Sky and Hulu PrideFest, the New Frontier experience marked the first time we ideated with VR from the very start.

Keeping VR UX in mind shaped every creative decision, but it also opened up new interaction possibilities like hand tracking, proximity-based chat between attendees, and cross-device multiplayer between web and VR.

The Original Idea: Superzoom

With video conferencing so prevalent in 2020, the Sundance team started ideating around how we could take a Zoom-like experience and make it creative and engaging. The Zoom concept grew out of wanting to meet audiences where they live, a mantra of the NF exhibition design. The video conference interface served as the experience’s menu, displaying everything attendees could then explore (like live content, social rooms, and the cinema).

While this neatly acknowledged the WFH realities of the pandemic, the conference interface presented a UX problem: all menu items needed to be evenly represented. This didn’t feel like an optimal approach, as clicking some tiles would take attendees to vast 3D environments whereas others would simply link out to another site.

Though we decided to move away from ‘Superzoom’ as our core concept, we still wanted to explore the idea of creating different environments, such as a film party and a cinema.

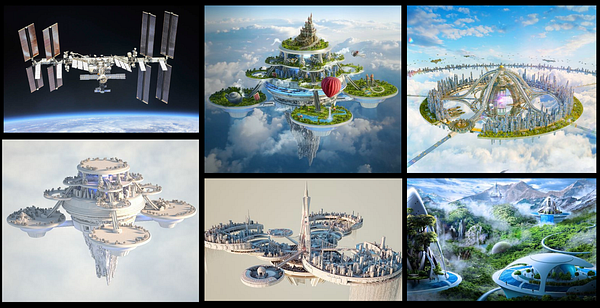

Inspiration for 3D environments users could socialise and view films in

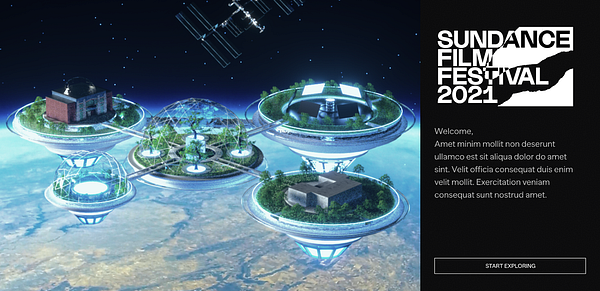

Before Space: A Satellite Venue

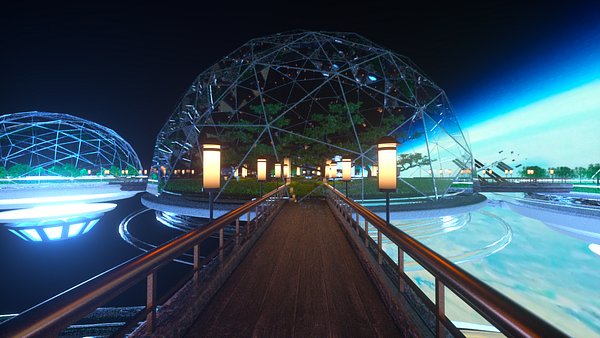

Moving away from the Superzoom concept ultimately expanded our visual direction. It was proposed that the NF venue might orbit alongside the International Space Station, telling the story of how satellites and cables connect the biodigital terrain of people and their devices, and leading us to designs where the festival, quite literally, takes place in a satellite.

A floating hub in the sky gave us an exciting overall look and feel, and also provided flexibility in how we developed each environment.

From there, we quickly aligned on the user needs that would dictate each environment in the experience and settled on: a space garden (the home environment), a film party, art gallery, and cinema house. Defined early in the process, these spaces (or “rooms”) carried through to the final experience.

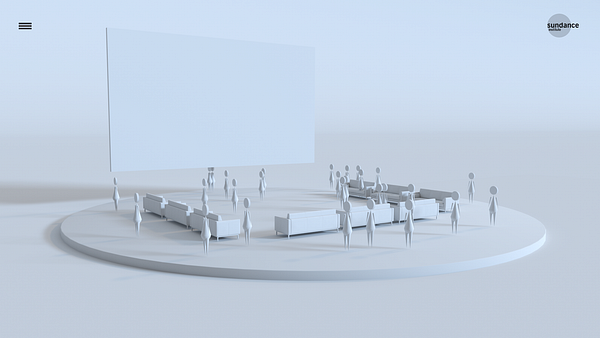

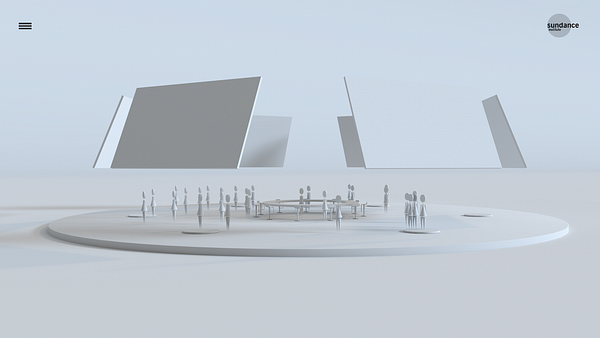

Early render experiments for the space garden (top left), gallery (top right) and cinema (bottom right).

Moving Avatars

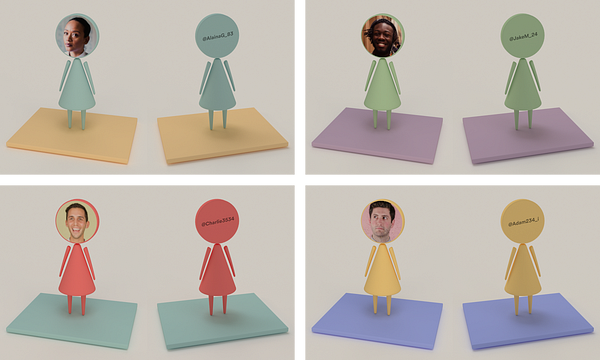

Every year, the Sundance Film Festival attracts thousands of people to Utah to watch screenings and discuss film media. We wanted to recreate that social atmosphere for this year’s virtual attendees, starting with the event’s avatars. We opted to design the avatars with human bodies and faces (rather than going for more fantastic avatars).

Leaning into the social aspect, we developed a system to give attendees the autonomy to move around the experience, whether that be within a single room or between different rooms. This not only gave the event a sense of space, but also enabled proximity-based features like chat bubbles (more on this later).

We kept the avatars cartoon-ish human forms to keep things fun and playful while also making it clear that each avatar represented a real person.

A Space-Themed Experience

The Sundance Film Festival identity was in significant flux throughout our design process. Utah (the traditional home of the festival) was planned to be a satellite venue for activations. The decision to take the NF experience into space had nothing to do with ‘the future’; rather, it was about reflecting the real-time presence of the festival.

The landing visual went through several iterations in production. Though we started with a map of the satellite, we ultimately settled on a simpler view of Earth. In the final experience, users started zoomed into their geo-location — so we were quite literally meeting the user where they were — before the camera backed all the way out into space.

This transition served as a vital UX link, connecting the users’ real location with the NF satellite experience and, moreover, connecting life on Earth with all the creativity and innovative thinking comprising the NF experience.

Different iterations of the landing were explored throughout production.

Refining the Rooms

So we had decided upon the rooms, and we had articulated our overall visual direction. The next step was to develop the visual direction and functionality of each space.

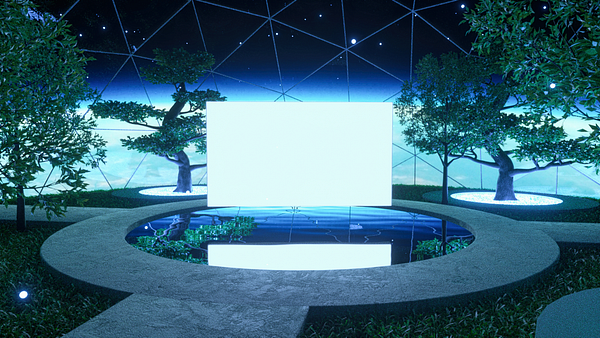

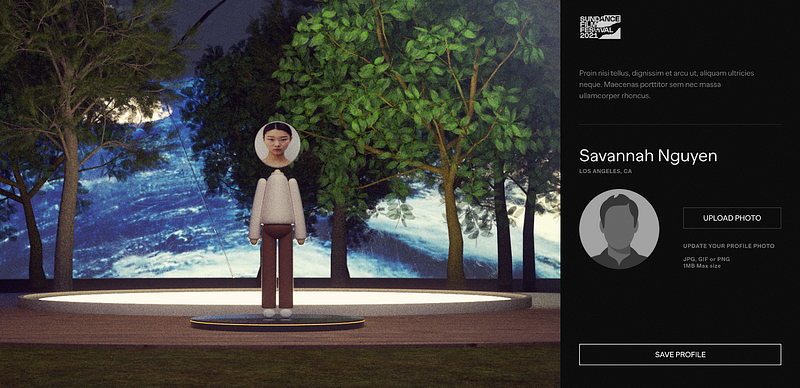

Space Garden

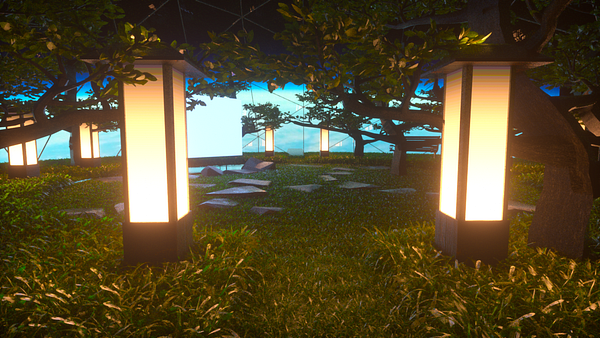

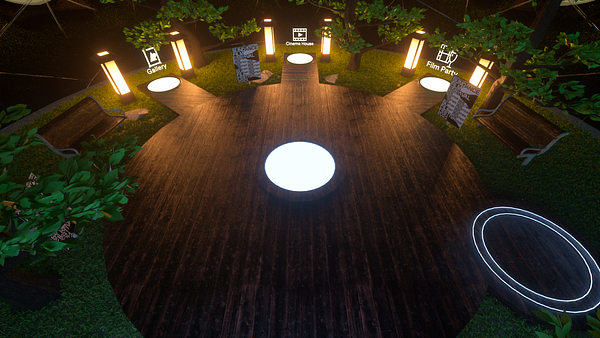

The Space Garden marked the entry point into the New Frontier experience. Because attendees would land here after creating their profile and avatar, we planned to create a welcoming and easy-to-navigate environment.

The Garden encouraged users to practice the mechanics of moving their avatars around the environment (whether they were on the web or in VR). We incorporated natural elements like trees to add a sense of biological life and warmth in the otherwise sterile space environment. By moving their avatars to embedded lights in the ground, which functioned as portals, users could travel to different rooms.

Though we initially considered having avatars walk from room to room (see top left reference), we decided it would be a little too cumbersome to have attendees walk everywhere and instead opted for teleportation.

In the final build, we added schedules on the portals’ signposts to give users information on each room’s daily events throughout the festival.

Early design explorations (top/bottom-left) and the final implementation in dev (bottom-right).

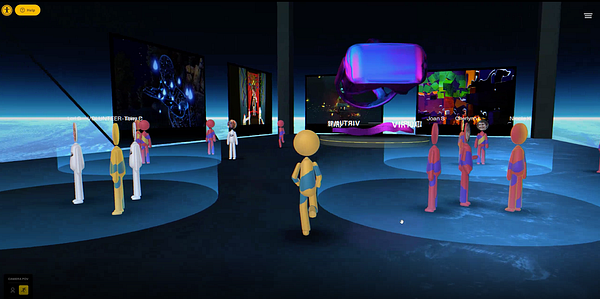

Film Party

Film Party was the social hub of the experience. Users could explore the environment and enter proximity-based chat bubbles with other attendees. It featured a large communal space with smaller, more intimate breakout rooms. To enliven the atmosphere, we integrated original compositions by film composers and added flora to visually anchor the room. Users could also access a running list of attendees present in the room in the side menu to network and connect.

The Film Party as both early design iterations (left) and final production build (right)

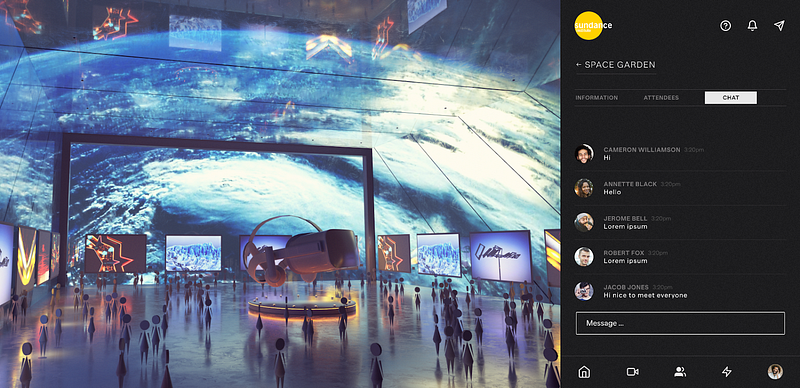

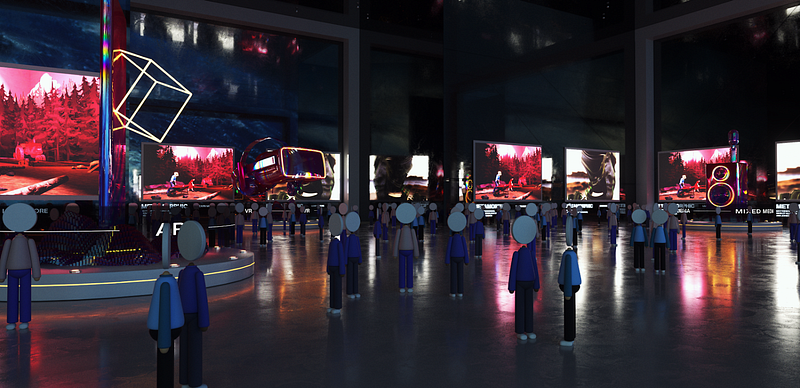

Gallery

The Gallery displayed the full slate of New Frontier experiences within a single room. Attendees could walk around to view the projects, each represented as a standalone showcase, and interested users could select an exhibit to learn more (via link to an external URL).

Visually, we wanted to elevate the art as much as possible. We decided to include floating 3D models throughout the room and make the space larger than the Film Party to recreate that feeling of visiting a real gallery.

Different design experiments and final production build (bottom-right)

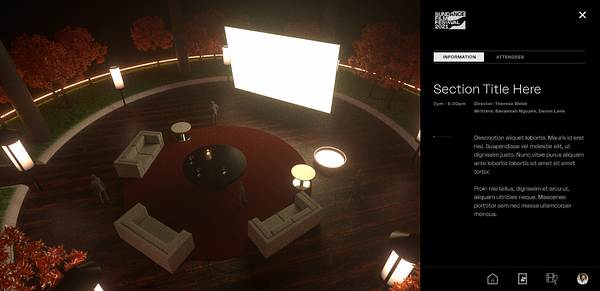

Cinema House

Unlike the other rooms, the Cinema House was only available through VR. In addition to watching film screenings, attendees could wander around and socialize as they could at the Film Party.

Early design explorations and final execution (right) of Cinema House

UI and Navigation

As in some of our other Dreamwave events, we used a side-panel UI for functional navigation. This made it easy for users to navigate the experience while maintaining a significant canvas for interactive 3D content.

Performance: Managing Avatar Animations

On the Web

Animating the avatars presented a big challenge, as it would have been a very slow process to independently render and skin animations for each avatar. To avoid the performance risk, we needed to render the avatars as instances of the same geometry and then run the animations inside a single shader.

Furthermore, the avatars’ movement was based on keyframe-baked animations. Our technical artists generated different behaviour animations (like walking or sitting down) and then exported the position of each vertex of the character for every frame of the animation. The export process produced a JSON file with all the vertex data for each frame.

We then loaded the animation files and converted them to floating point textures. The vertex shader read these textures to modify each character’s pose, using blending functions to decide which animation to display depending on how the user interacted with the environment.

This allowed us to have a single modular shader that rendered all the avatars as instances, onto which different animations could be applied. This greatly improved performance because regularly skinned animations would have required multiple matrix multiplications, whereas the baked animations only required a single texture read for each vertex of the mesh.
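As a rough illustration of the baking idea described above, here is a minimal CPU-side sketch in TypeScript. The `BakedClip` shape, the one-row-per-frame texture layout, and the function names are assumptions made for the example, not the project's actual export format or shader code. `samplePosition` stands in for the single texture read the vertex shader performs, and `blendPose` for the blending between two animations.

```typescript
// Hypothetical shape of an exported clip: frames[f] is a flat
// [x0, y0, z0, x1, y1, z1, ...] list of vertex positions for frame f.
interface BakedClip {
  vertexCount: number;
  frames: number[][];
}

type Position = [number, number, number];

// Pack a clip into one RGBA float buffer: one row per frame, one texel per vertex.
// A buffer like this could be uploaded to the GPU as a floating point texture.
function packClip(clip: BakedClip): Float32Array {
  const data = new Float32Array(clip.frames.length * clip.vertexCount * 4);
  clip.frames.forEach((frame, f) => {
    if (frame.length !== clip.vertexCount * 3) {
      throw new Error(`frame ${f} has an unexpected length`);
    }
    for (let v = 0; v < clip.vertexCount; v++) {
      const dst = (f * clip.vertexCount + v) * 4;
      data[dst] = frame[v * 3];
      data[dst + 1] = frame[v * 3 + 1];
      data[dst + 2] = frame[v * 3 + 2];
      data[dst + 3] = 1; // alpha channel is unused padding
    }
  });
  return data;
}

// CPU stand-in for the vertex shader's texture read: one lookup per vertex.
function samplePosition(
  tex: Float32Array,
  vertexCount: number,
  frame: number,
  vertex: number
): Position {
  const i = (frame * vertexCount + vertex) * 4;
  return [tex[i], tex[i + 1], tex[i + 2]];
}

// Linear blend between the same vertex in two clips (t = 0 gives a, t = 1 gives b).
function blendPose(a: Position, b: Position, t: number): Position {
  return [
    a[0] + (b[0] - a[0]) * t,
    a[1] + (b[1] - a[1]) * t,
    a[2] + (b[2] - a[2]) * t,
  ];
}
```

In a real build the lookup and blend would live in the vertex shader, with the frame index and blend weight supplied per instance.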

Baked animations reduced the per-vertex work on the GPU, improving the experience.

In VR

The baked animation concept worked well for web, where users could decouple the movement of their avatars from the camera through a third-person POV. VR posed a different challenge, however, as the user needed to interact with the environment from a first-person view.

To manage this, we used teleporting avatars in VR. Users could use their hand controllers to trigger a teleportation and move the avatar to the new position. This created another challenge, though, because it introduced the chance of avatars landing in places they shouldn’t be (e.g. walls, screens, tables).

To account for this, a 2D collision system validated whether users were trying to teleport to a reachable location in real time. If the area selected was reachable, a blue beam appeared to communicate to users that they could teleport there; if not, the beam displayed red.
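The check described above can be sketched as a point-in-rectangle test on the floor plane. This is only an illustration: the `Rect` type, the use of axis-aligned rectangles for obstacles, and the function names are assumptions, and the real collision system may have been structured differently.

```typescript
// Axis-aligned rectangle on the floor plane (x/z), used for both the
// room bounds and obstacles such as walls, screens, and tables.
interface Rect {
  minX: number;
  minZ: number;
  maxX: number;
  maxZ: number;
}

function contains(r: Rect, x: number, z: number): boolean {
  return x >= r.minX && x <= r.maxX && z >= r.minZ && z <= r.maxZ;
}

// A target is reachable if it is inside the room and outside every obstacle.
function canTeleport(x: number, z: number, room: Rect, obstacles: Rect[]): boolean {
  return contains(room, x, z) && !obstacles.some((o) => contains(o, x, z));
}

// Beam feedback shown to the user: blue for reachable, red otherwise.
function beamColor(x: number, z: number, room: Rect, obstacles: Rect[]): "blue" | "red" {
  return canTeleport(x, z, room, obstacles) ? "blue" : "red";
}
```

Because the test only looks at the floor plane, it stays cheap enough to run every frame while the user is aiming the beam.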

Prototypes and final execution (right) of moving around the space in VR.

Chat Bubbles

A common challenge across our Dreamwave events is encouraging social interaction among attendees, and Chat Bubbles were a natural solution for New Frontier. Additionally, because of the ubiquity of video conferencing during the pandemic, we incorporated video chat to further personalize conversations.

To chat with others, users could navigate their avatar next to another attendee, either by walking on web or teleporting in VR, and accept a prompt to start a chat. This created a small bubble around the participating users to launch an audio or video chat. Users within the bubble could then chat with one another, easily jumping in and out as needed. To build in safety and social etiquette, we made the conscious decision to give users the option of accepting or declining a chat.
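A minimal sketch of the proximity and consent logic described above might look like the following. The `Attendee` type, the default radius, and the function names are hypothetical and chosen for illustration only.

```typescript
interface Attendee {
  id: string;
  x: number;
  z: number;
}

// Attendees close enough to be offered a chat prompt.
// The default radius is an assumed value, not one taken from the project.
function nearbyAttendees(self: Attendee, others: Attendee[], radius = 2): Attendee[] {
  return others.filter(
    (o) => o.id !== self.id && Math.hypot(o.x - self.x, o.z - self.z) <= radius
  );
}

// A bubble only gains a member once the invited attendee accepts the prompt;
// declining leaves the bubble unchanged.
function resolvePrompt(bubble: Set<string>, invitedId: string, accepted: boolean): Set<string> {
  const next = new Set(bubble);
  if (accepted) next.add(invitedId);
  return next;
}
```

Keeping the accept or decline step separate from the distance check means that walking past someone never opens an audio or video channel on its own.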

We also built accessibility controls into the platform to support everyone in engaging with the experience — a big priority for both Active Theory and the Sundance Institute. This resulted in some entertaining ‘party-like’ social interactions as groups automatically accommodated newly joined members, and some members had to wait to enter the conversation at an appropriate moment. Just like a real party!

The chat bubbles were built on top of WebRTC, which enables audio and video chat capability in browsers. Since all avatars were batched and rendered together, the video feeds were composited as squares in a large texture atlas that was then mapped to the “face” of each avatar, so users could experience as real a human connection as possible within the virtual experience.
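To make the atlas mapping concrete, here is a small sketch of how a per-avatar slot could be turned into texture coordinates. The square grid layout, the `AtlasRegion` type, and the function names are assumptions for the example rather than the project's actual layout.

```typescript
// Region of the atlas occupied by one video feed, in 0..1 UV space.
interface AtlasRegion {
  u: number;
  v: number;
  size: number;
}

// Locate a slot in a square atlas with tilesPerSide x tilesPerSide tiles,
// filled row by row.
function atlasRegion(slot: number, tilesPerSide: number): AtlasRegion {
  if (slot < 0 || slot >= tilesPerSide * tilesPerSide) {
    throw new RangeError(`slot ${slot} does not fit in the atlas`);
  }
  const size = 1 / tilesPerSide;
  const col = slot % tilesPerSide;
  const row = Math.floor(slot / tilesPerSide);
  return { u: col * size, v: row * size, size };
}

// Remap a UV on the avatar's face quad (0..1) into that avatar's tile.
function faceUvToAtlas(region: AtlasRegion, faceU: number, faceV: number): [number, number] {
  return [region.u + faceU * region.size, region.v + faceV * region.size];
}
```

With this kind of mapping, each instance only needs its slot index to find its own video feed, so all faces can still be drawn from one shared texture.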

Chat bubbles enabled audio and video chats between attendees

Hand Tracking

Through other VR projects, we’ve found hand tracking to be very social and enjoyable for users. With this in mind, we pushed to include VR hand tracking in the build. This was only available in VR: though web users could see a VR user’s hands moving, they couldn’t control their own.

Hand tracking animations can be broken down into two different cases: your own avatar’s hand visualizations and the arm movements of other users’ avatars.

For your own hands, the application used VR controller position and orientation to render ghostly white hands to give a sense of physical self-awareness.

Animating the movements of other users’ VR avatars was more of a challenge, as arms needed to be animated in real time (requiring rigging animations and a kinematics system resolving arm position based on VR hand controller position). To manage performance, we needed a solution that allowed the GPU to work on a single shader to reach all avatar instances, which meant that a regular skinning animation wasn’t possible.

To solve this, we used an algorithm that predicted arm positions and orientation from the VR controller data. A shader then used a FABRIK solver to generate a set of segment paths that worked as rigging bones to deform the arm geometry. For skinning, the shader influenced the topology of the arm (which is defined as a cylinder) and bent it to its required pose, reallocating the vertices of the arm relative to the new axis of the cylinder (which is defined by the segment paths calculated with inverse kinematics).
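For readers unfamiliar with FABRIK (Forward And Backward Reaching Inverse Kinematics), the sketch below shows the core of the algorithm on the CPU in TypeScript. It is an illustration only: the project ran its solver inside a shader, and the function names, iteration count, and tolerance here are assumptions. The returned joint positions play the role of the segment paths that the arm geometry is bent along.

```typescript
type Vec3 = [number, number, number];

const dist = (a: Vec3, b: Vec3): number =>
  Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]);

// Point at distance d from `from`, in the direction of `to`.
function placeAt(from: Vec3, to: Vec3, d: number): Vec3 {
  const l = dist(from, to);
  if (l === 0) return [from[0], from[1], from[2]]; // direction undefined, stay put
  const k = d / l;
  return [
    from[0] + (to[0] - from[0]) * k,
    from[1] + (to[1] - from[1]) * k,
    from[2] + (to[2] - from[2]) * k,
  ];
}

// joints: e.g. [shoulder, elbow, wrist]; target: the controller position.
function solveFabrik(joints: Vec3[], target: Vec3, iterations = 10, tolerance = 1e-4): Vec3[] {
  const p = joints.map((j) => [j[0], j[1], j[2]] as Vec3);
  const last = p.length - 1;
  const root: Vec3 = [p[0][0], p[0][1], p[0][2]];
  // Segment lengths are fixed by the initial pose.
  const lengths = p.slice(1).map((j, i) => dist(p[i], j));
  const reach = lengths.reduce((sum, l) => sum + l, 0);

  if (dist(root, target) > reach) {
    // Target out of reach: stretch the chain straight toward it.
    for (let i = 0; i < last; i++) p[i + 1] = placeAt(p[i], target, lengths[i]);
    return p;
  }

  for (let it = 0; it < iterations && dist(p[last], target) > tolerance; it++) {
    // Backward pass: pin the tip to the target and walk back to the root.
    p[last] = [target[0], target[1], target[2]];
    for (let i = last - 1; i >= 0; i--) p[i] = placeAt(p[i + 1], p[i], lengths[i]);
    // Forward pass: pin the root again and walk out to the tip.
    p[0] = [root[0], root[1], root[2]];
    for (let i = 0; i < last; i++) p[i + 1] = placeAt(p[i], p[i + 1], lengths[i]);
  }
  return p;
}
```

FABRIK only uses distance calculations and point placement along a line, with no matrix inversion, which is one reason it is a plausible fit for running per instance on the GPU.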

Go Live

The New Frontier experience was live from January 28 to February 3, 2021, and attracted thousands of attendees who hung out at the Gallery and Film Party. It was covered in leading publications, including Engadget and Vanity Fair. For more highlights from New Frontier and other Dreamwave events, check out our Dreamwave website.