The Field

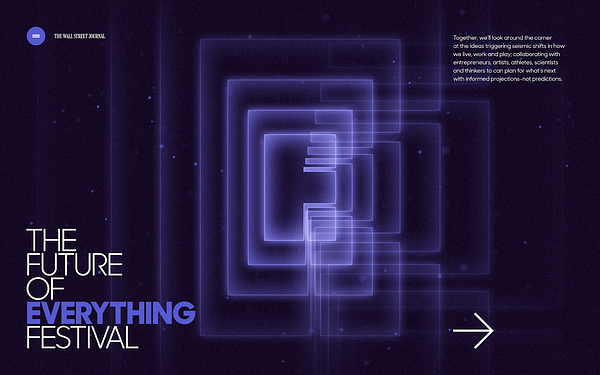

At Active Theory, we love building web experiences that tell an engaging and interesting story. In early 2021, we were contacted by The Wall Street Journal Custom Events team about creating an immersive and social activation for their upcoming Future of Everything Festival.

The Future of Everything Festival, which ran from May 11–13, 2021, featured speakers including Ray Dalio, Paris Hilton and Whitney Wolfe Herd, and explored themes shaping the future of humanity and society.

As part of this, we created The Field VR — an audiovisual VR and web experience that took festival attendees through a series of immersive stories highlighting environmental sustainability and mental health and wellness.

Early Ideation

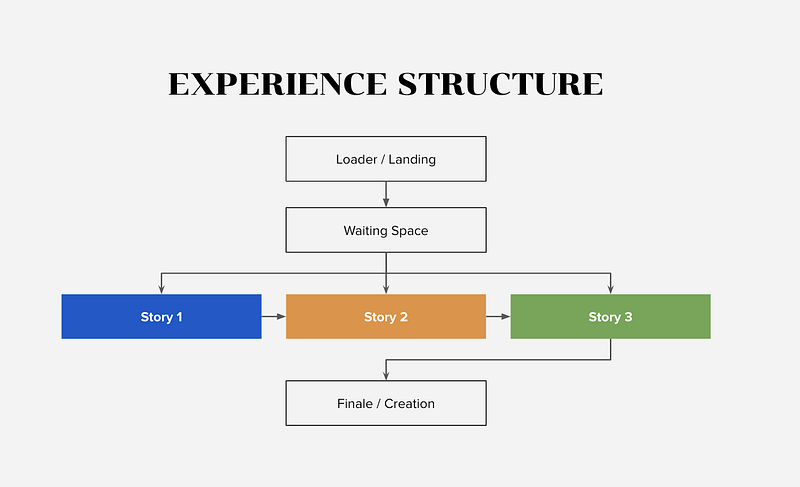

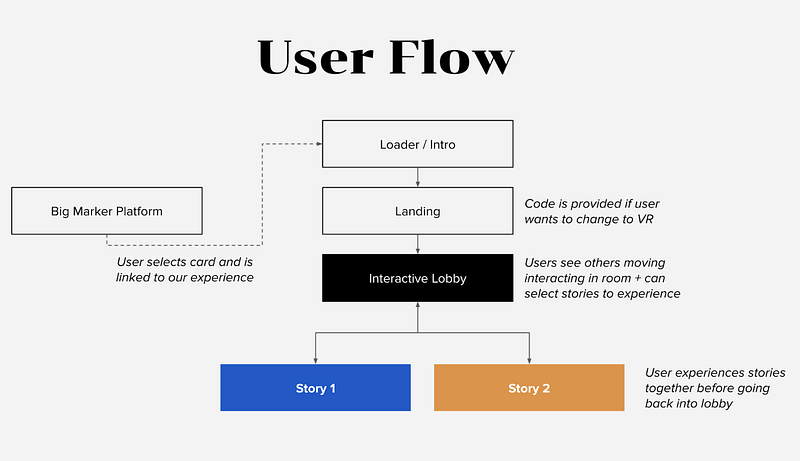

For users, the initial concept was a fairly linear experience: they would journey through a series of brief interactive stories before reaching a shareable finale. (We quickly evolved this idea into a more open lobby with branching stories, to allow for social interaction and user freedom; more on this later.)

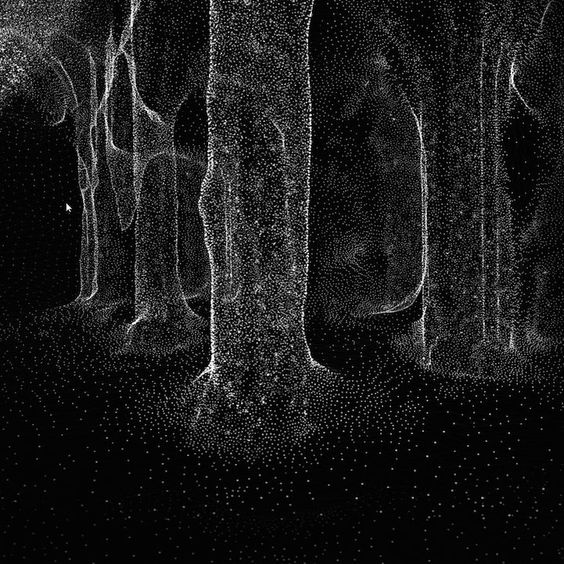

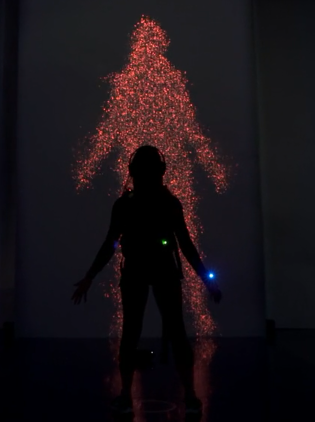

Visually, the stories would be brought to life in immersive 3D environments using a unique aesthetic combining 3D brush strokes and LIDAR-inspired particle systems. These visual systems were loosely based on previous work we did with Droga5 and Thorne Supplements in 2019, but were evolved for this new storytelling context.

The visual direction would combine 3D brush strokes with particle systems.

To illustrate the concept, we developed some initial style frames showing a story selection screen and a storytelling screen.

Initial style frame to demonstrate the concept

Adding the Social Lobby

Because the Future of Everything Festival was live for only three days, we knew website traffic would be concentrated in a short window and would come from a specific audience of attendees. With this in mind, there was a push to include a social networking component in the experience.

To account for this, we reworked the user flow to add an interactive lobby section in which users could audio chat together and move around to select stories. This added some flexibility to our previously linear flow, and gave us a new lobby environment to define.

Matching the overall art direction of the experience (and aligning with the Future of Everything festival theme), the lobby was to be an interactive galaxy of particles, with users represented as a collection of dynamic particles that would fly around the galaxy — above in the air for desktop/mobile and within the galaxy for VR users.

Initial visuals demonstrating the galaxy and a VR user’s perspective.

The avatar itself would be an evolving cluster of particles, changing colors as users progressed through the stories. For VR, clusters would form around the user’s headset and hand trackers to give a sense of form to the user.
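The post doesn’t say how the avatar clusters were built. As a minimal sketch, assuming tracked points arrive as plain `[x, y, z]` arrays, particles could be scattered around the headset and controllers and tinted by interpolating through a palette as story progress goes from 0 to 1. The names `clusterAround` and `progressColor`, the seeded PRNG and any palette you pass in are hypothetical.

```typescript
// Hypothetical sketch: particle avatar clustered around tracked points and
// tinted by story progress. All names and values are illustrative.
type Vec3 = [number, number, number];
type RGB = [number, number, number];

// Small seeded PRNG (mulberry32) so a user's cluster layout is reproducible.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Assign particles round-robin to anchors (e.g. headset, left and right
// controller) and place each uniformly inside a sphere of `radius`.
function clusterAround(anchors: Vec3[], count: number, radius: number, rand: () => number): Vec3[] {
  const out: Vec3[] = [];
  for (let i = 0; i < count; i++) {
    const a = anchors[i % anchors.length];
    const z = 2 * rand() - 1;             // uniform direction: cos(polar angle)
    const phi = 2 * Math.PI * rand();     // azimuth
    const r = radius * Math.cbrt(rand()); // cube root gives uniform volume density
    const s = Math.sqrt(1 - z * z);
    out.push([a[0] + r * s * Math.cos(phi), a[1] + r * s * Math.sin(phi), a[2] + r * z]);
  }
  return out;
}

// Interpolate through palette stops (at least two) as progress goes 0 -> 1.
function progressColor(palette: RGB[], progress: number): RGB {
  const p = Math.min(Math.max(progress, 0), 1) * (palette.length - 1);
  const i = Math.min(Math.floor(p), palette.length - 2);
  const f = p - i;
  const c0 = palette[i];
  const c1 = palette[i + 1];
  return [c0[0] + (c1[0] - c0[0]) * f, c0[1] + (c1[1] - c0[1]) * f, c0[2] + (c1[2] - c0[2]) * f];
}
```

Keeping the seed fixed per user means the cluster keeps the same shape as it follows the headset and hands from frame to frame.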

Visual inspiration for the lobby and user avatars within the galaxy.

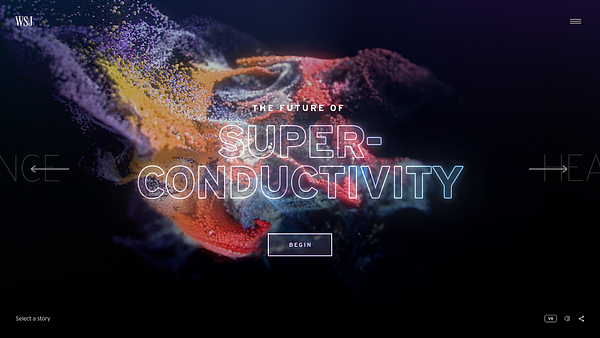

Branding and Visuals

From a visual standpoint, our approach was to create a gallery-like format, so a minimalist aesthetic was always going to be key. That is why the interface and typography are extremely clean and stripped back, leaving the galaxy in the lobby and the particle forms throughout the stories undisturbed during storytelling.

Early design exploration

The overall design direction was built around space and time, drawing on the vibrant tones found in nebula formations and on motion that feels like traveling through a space-time warp or into an alternate dimension.

A key design challenge was creating screens that work in both a web and a VR context. Because we typically design on 2D screens, we had to make a lot of conceptual leaps and rely on descriptions of the VR experience, as representing it on a designed screen wasn’t particularly feasible. To account for this, we moved into development very quickly to demonstrate the VR UX in a hands-on way, rather than overcooking things in 2D design.

‘The Field’ ultimately became the name of our experience. On the landing screen, we animated the title text with movements that loosely reflect how the Earth’s magnetic field reacts to natural effects like wind and currents.

Stories, Scripts and Scenes

From the main galaxy lobby, users could select one of two stories, each taking them through a 5–10 minute personal audiovisual journey. Thematically, both stories explored the future of society: the first through the environment, and the second through our inner selves.

Story 1 — World on Pause

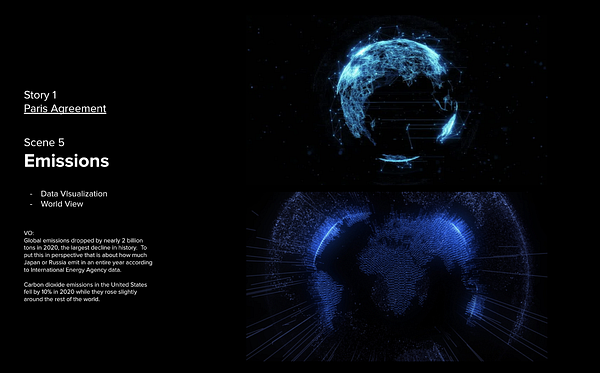

The opening story, World on Pause, explored the impact of the COVID-19 pandemic on the environment. It discusses the effects of reduced transit during the global economic slowdown, and how that period could serve as a data point for the future of sustainability. On receiving the script, we broke it into a series of scenes (each planned to last between 10 and 20 seconds), with each scene built around a visual theme drawn from the script. World on Pause consisted of seven scenes: traffic, rain, oil markets, environment, emissions, air and water, and reflections.

Planning the visuals for scene 1: traffic and scene 5: emissions.

Story 2 — Sensory Reboot

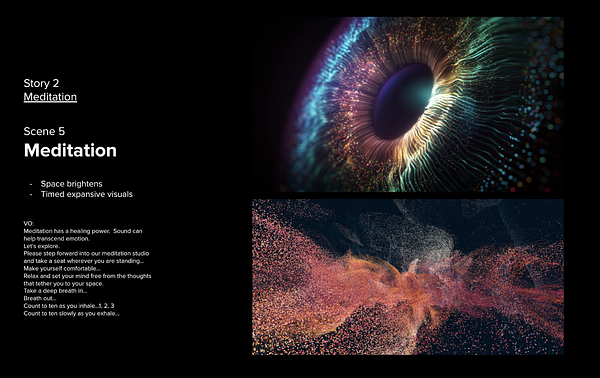

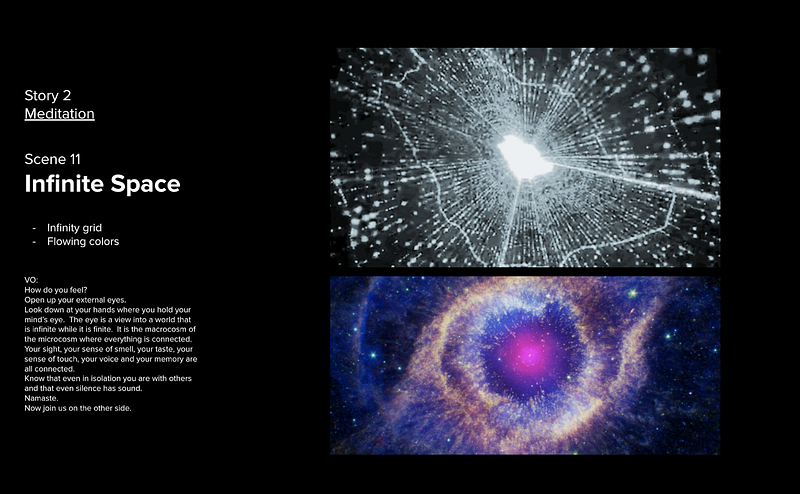

While story 1 explored the external world, story 2 took us in another direction to explore the world inside us. At a slightly longer length of around 10 minutes, story 2 sets a calming stage before guiding users through a meditation exercise. The 11 scenes of story 2 include the senses, how memory and smell are intertwined, illusions the brain constructs, a transition, an immersive meditation enhanced by vision orbs, spatial expansion, chakra-inspired sound, a nebula, affection and space-time contraction.

Planning the visuals for scene 5: meditation and scene 11: space

Creating the Stories

With the scripts and visual references defined, we moved into development. For long scripted sequences like these, we use a custom plugin that lets artists animate scenes inside Cinema4D and stream the results directly into the browser. This allows fine control over particle choreography and effect animations, as the artist can simply scrub back and forth along the Cinema4D timeline to make sure the animation syncs with the soundtrack. When the artist is done, the plugin exports the animation to a compressed format, so the exact sequence designed in Cinema4D is reflected in the final build.
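The plugin and its compressed export format aren’t public, so this is only a sketch of the playback side, assuming the export boils down to sorted `(time, value)` keyframes per animated property. Sampling each track at the soundtrack’s current time (for example an audio element’s `currentTime`) is one way a browser build could stay locked to the audio the artist scrubbed against. `Keyframe` and `sampleTrack` are hypothetical names.

```typescript
// Hypothetical sketch: sample an exported animation track at the current
// soundtrack time. A sorted keyframe list is assumed; the real plugin's
// format is not described in the post.
interface Keyframe {
  time: number;  // seconds on the shared audio/animation timeline
  value: number; // any scalar channel, e.g. opacity or a particle parameter
}

// Binary-search the keyframe interval containing `t` and interpolate
// linearly inside it. Times outside the track clamp to the end values.
function sampleTrack(track: Keyframe[], t: number): number {
  if (track.length === 0) throw new Error("empty track");
  if (t <= track[0].time) return track[0].value;
  const last = track[track.length - 1];
  if (t >= last.time) return last.value;
  let lo = 0;
  let hi = track.length - 1;
  // Invariant: track[lo].time <= t < track[hi].time
  while (hi - lo > 1) {
    const mid = (lo + hi) >> 1;
    if (track[mid].time <= t) lo = mid;
    else hi = mid;
  }
  const a = track[lo];
  const b = track[hi];
  const f = (t - a.time) / (b.time - a.time);
  return a.value + (b.value - a.value) * f;
}

// In a render loop this might be called as:
//   const v = sampleTrack(opacityTrack, audio.currentTime);
```

Driving every track from the audio clock, rather than from frame counts, keeps visuals and soundtrack aligned even when the frame rate fluctuates.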

Visual Approach

To construct and choreograph these elaborate particle scenes we used our in-house particle system generator, Proton. Proton allows both artists and developers to spin up complex FBO particle simulations with minimal effort. It provides artists with a UI panel for modifying values at runtime, and a code interface for defining particle behaviour, similar to what you might find in a program like Unity.
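Proton’s API isn’t public, so what follows is a hypothetical CPU analogue of the FBO technique it builds on. In an FBO simulation, particle state lives in floating-point textures; each frame a shader reads the previous texture and writes the next one, then the two are swapped (“ping-pong”). Here `Float32Array`s stand in for the textures and a plain function stands in for the behaviour shader; `PingPongSim` and `rise` are invented for illustration.

```typescript
// Hypothetical CPU analogue of an FBO ("ping-pong") particle simulation.
// On the GPU, each buffer would be an RGBA float texture and `behaviour`
// a fragment shader run once per particle texel.
type Behaviour = (src: Float32Array, i: number, dt: number, out: Float32Array) => void;

class PingPongSim {
  readonly count: number;
  private behaviour: Behaviour;
  private read: Float32Array;
  private write: Float32Array;

  constructor(count: number, behaviour: Behaviour) {
    this.count = count;
    this.behaviour = behaviour;
    // 4 floats per particle (x, y, z, w), mirroring one RGBA texel.
    this.read = new Float32Array(count * 4);
    this.write = new Float32Array(count * 4);
  }

  // Current state (the buffer the next step will read from).
  get state(): Float32Array {
    return this.read;
  }

  step(dt: number): void {
    // Every particle reads only the previous frame's state...
    for (let i = 0; i < this.count; i++) this.behaviour(this.read, i, dt, this.write);
    // ...then the buffers swap, just as two render targets would.
    const tmp = this.read;
    this.read = this.write;
    this.write = tmp;
  }
}

// Example behaviour: drift up along +y and count down a life value in w.
const rise: Behaviour = (src, i, dt, out) => {
  const o = i * 4;
  out[o] = src[o];
  out[o + 1] = src[o + 1] + 0.5 * dt;
  out[o + 2] = src[o + 2];
  out[o + 3] = Math.max(0, src[o + 3] - dt);
};
```

Because no particle ever reads the buffer being written, every particle can be updated independently, which is what makes the same structure map cleanly onto a GPU pass.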

For the lidar scenes, we created and exported point cloud data using Houdini. With this technique, we projected points onto previously created meshes from the position of an emitting sphere, which gives much more interesting results than a uniform point scatter, especially in terms of depth.
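The Houdini setup isn’t described in detail. One way to get a comparable lidar-style result, sketched here under that assumption, is to fire rays outward from the emitter position and keep only the point where each ray first hits the mesh, using the standard Möller–Trumbore ray/triangle test. The function names and the Fibonacci-sphere ray distribution are our own choices, not taken from the original pipeline.

```typescript
// Hypothetical lidar-style scan: rays from an emitter hit a triangle mesh,
// and only the nearest hit per ray is kept as a point.
type V3 = [number, number, number];
const sub = (a: V3, b: V3): V3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const cross = (a: V3, b: V3): V3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const dot = (a: V3, b: V3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

// Möller–Trumbore ray/triangle test; returns distance t along dir, or null.
function rayTriangle(orig: V3, dir: V3, v0: V3, v1: V3, v2: V3): number | null {
  const e1 = sub(v1, v0);
  const e2 = sub(v2, v0);
  const p = cross(dir, e2);
  const det = dot(e1, p);
  if (Math.abs(det) < 1e-9) return null; // ray parallel to the triangle
  const inv = 1 / det;
  const s = sub(orig, v0);
  const u = dot(s, p) * inv;
  if (u < 0 || u > 1) return null;
  const q = cross(s, e1);
  const v = dot(dir, q) * inv;
  if (v < 0 || u + v > 1) return null;
  const t = dot(e2, q) * inv;
  return t > 1e-9 ? t : null; // only hits in front of the emitter
}

// Fire `rays` evenly spread directions (Fibonacci sphere) from the emitter.
function scan(emitter: V3, tris: [V3, V3, V3][], rays: number): V3[] {
  const hits: V3[] = [];
  const golden = Math.PI * (3 - Math.sqrt(5));
  for (let i = 0; i < rays; i++) {
    const y = 1 - (2 * (i + 0.5)) / rays;
    const r = Math.sqrt(1 - y * y);
    const dir: V3 = [r * Math.cos(golden * i), y, r * Math.sin(golden * i)];
    let best = Infinity;
    for (const [a, b, c] of tris) {
      const t = rayTriangle(emitter, dir, a, b, c);
      if (t !== null && t < best) best = t;
    }
    if (best < Infinity) {
      hits.push([emitter[0] + dir[0] * best, emitter[1] + dir[1] * best, emitter[2] + dir[2] * best]);
    }
  }
  return hits;
}
```

Because rays fan out from a single point, hit density falls off with distance and occluded surfaces receive no points, which is one plausible reason this reads with more depth than a uniform scatter.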

Shadows and Lighting Effects

To push the stylization of these particles even further, we added a new feature to our particle system generator that allows real-time volumetric shadows. The implementation is based on the Volumetric Particle Shadows paper by Simon Green. The idea is to place the particles into a 3D texture aligned with the main light’s point of view, where each layer of the texture accumulates the shadow contribution of the layers before it, so shadowing builds up progressively. Once the accumulation pass is complete, each particle’s shadow is looked up from the voxel at its position. We reused the blur from the scattering effect to soften the shadows, adding a penumbra that reduces the harshness of directional shadows.
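A simplified CPU sketch of the layered accumulation, assuming particle positions are already in light space and normalized to [0, 1) on each axis, with the light shining along +z so layer 0 is nearest the light. Mapping accumulated occlusion to transmittance with `Math.exp(-occ)` is our assumption, and the penumbra blur is omitted; on the GPU each step would be a pass over a 3D texture. `particleShadows` is a hypothetical name.

```typescript
// Hypothetical CPU sketch of layered particle shadows in the spirit of
// "Volumetric Particle Shadows". Light travels along +z; layer 0 is lit first.
type Pt = [number, number, number];

function particleShadows(pos: Pt[], res: number, layers: number, opacity: number): number[] {
  const vox = (x: number, y: number, z: number) => (z * res + y) * res + x;
  const grid = new Float32Array(res * res * layers);
  const cell = (p: Pt): number[] => [
    Math.min(res - 1, Math.floor(p[0] * res)),
    Math.min(res - 1, Math.floor(p[1] * res)),
    Math.min(layers - 1, Math.floor(p[2] * layers)),
  ];

  // 1) Deposit each particle's opacity into the voxel it falls in.
  for (const p of pos) {
    const [x, y, z] = cell(p);
    grid[vox(x, y, z)] += opacity;
  }

  // 2) Accumulate progressively: each layer adds the occlusion of all layers before it.
  const layerSize = res * res;
  for (let z = 1; z < layers; z++) {
    for (let i = 0; i < layerSize; i++) grid[z * layerSize + i] += grid[(z - 1) * layerSize + i];
  }

  // 3) Each particle reads the occlusion accumulated in front of its own layer
  //    and converts it to a light transmittance in (0, 1].
  return pos.map((p) => {
    const [x, y, z] = cell(p);
    const occ = z > 0 ? grid[vox(x, y, z - 1)] : 0;
    return Math.exp(-occ);
  });
}
```

Reading the previous layer, rather than the particle’s own, keeps particles from shadowing themselves.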

Light scattering was achieved by applying a blur pass over the layers, with its contribution modulated by an amplitude scale. This approach has two limitations: it only works for directional lights (like sunlight), and because a 3D texture covers only a limited volume, we needed to account for particles that could move freely anywhere.
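The scattering pass can be sketched, on a single layer, as a separable box blur whose result is added back scaled by an amplitude. This is a hypothetical stand-in: the post doesn’t specify the blur kernel or exactly how the contribution is combined, and `boxBlur` and `scatter` are illustrative names.

```typescript
// Hypothetical sketch: separable box blur over one res x res layer,
// then the blurred light is added back scaled by `amplitude`.
function boxBlur(layer: Float32Array, res: number, radius: number): Float32Array {
  const tmp = new Float32Array(layer.length);
  const out = new Float32Array(layer.length);
  const clamp = (v: number) => Math.min(res - 1, Math.max(0, v)); // clamp-to-edge sampling
  const width = 2 * radius + 1;
  // Horizontal pass.
  for (let y = 0; y < res; y++) {
    for (let x = 0; x < res; x++) {
      let sum = 0;
      for (let k = -radius; k <= radius; k++) sum += layer[y * res + clamp(x + k)];
      tmp[y * res + x] = sum / width;
    }
  }
  // Vertical pass over the horizontally blurred result.
  for (let y = 0; y < res; y++) {
    for (let x = 0; x < res; x++) {
      let sum = 0;
      for (let k = -radius; k <= radius; k++) sum += tmp[clamp(y + k) * res + x];
      out[y * res + x] = sum / width;
    }
  }
  return out;
}

// Scattered light = original layer + amplitude * blurred layer.
function scatter(layer: Float32Array, res: number, radius: number, amplitude: number): Float32Array {
  const blurred = boxBlur(layer, res, radius);
  return layer.map((v, i) => v + amplitude * blurred[i]);
}
```

Splitting the blur into two 1D passes costs 2 × (2r + 1) samples per texel instead of (2r + 1)², which matters when it runs over every layer of a 3D texture.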

To overcome this, we computed a bounding box containing all the particles and normalized their positions against it. The bounding box was found with a reduction (similar to mipmapping, but comparing values to keep minimums and maximums instead of averaging them), keeping all the calculations on the GPU.
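A CPU emulation of that reduction, as a sketch: each pass combines pairs of entries with a component-wise min and max, halving the data like a mip level, until one bounding box remains; positions are then remapped into [0, 1] so they can index the 3D texture. On the GPU each pass would render into a smaller target; `reduceBounds` and `normalize` are hypothetical names.

```typescript
// Hypothetical CPU emulation of a GPU min/max reduction for particle bounds.
type Pos = [number, number, number];
type Box = { min: Pos; max: Pos };

function reduceBounds(pos: Pos[]): Box {
  if (pos.length === 0) throw new Error("no particles");
  // Level 0: every particle is its own degenerate box.
  let level: Box[] = pos.map((p): Box => ({ min: [p[0], p[1], p[2]], max: [p[0], p[1], p[2]] }));
  // Each pass halves the level, like one step down a mip chain.
  while (level.length > 1) {
    const next: Box[] = [];
    for (let i = 0; i < level.length; i += 2) {
      const a = level[i];
      const b = i + 1 < level.length ? level[i + 1] : a; // odd entry passes through
      next.push({
        min: [Math.min(a.min[0], b.min[0]), Math.min(a.min[1], b.min[1]), Math.min(a.min[2], b.min[2])],
        max: [Math.max(a.max[0], b.max[0]), Math.max(a.max[1], b.max[1]), Math.max(a.max[2], b.max[2])],
      });
    }
    level = next;
  }
  return level[0];
}

// Map each position into [0,1]^3 relative to the box; flat axes map to 0.
function normalize(pos: Pos[], box: Box): Pos[] {
  return pos.map((p): Pos => {
    const n: Pos = [0, 0, 0];
    for (let k = 0; k < 3; k++) {
      const extent = box.max[k] - box.min[k];
      n[k] = extent > 0 ? (p[k] - box.min[k]) / extent : 0;
    }
    return n;
  });
}
```

The reduction takes about log2(N) passes for N particles, and because min and max are order-independent, pairing neighbours in any layout gives the same box.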

Particle Movement

Several of the scenes, such as the scene describing the Crown Chakra, needed particles that would flow along spline pathways. To achieve this effect, we used Proton’s existing spline particle functionality, which has the ability to accept an array of spline paths and automatically animate particles along them. To get the spline data into the scene, artists use one of our exporters for Cinema4D or Houdini, and plug the resulting JSON into Proton to see the new particle effect they’ve created. Since particles can either stick very closely to splines to create ‘lines’, or spread far away from the spline center to create more of a flowing stream effect, we’ve found spline particle movement to be a very versatile choice when composing these scenes.
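Proton’s spline API and the exporters’ JSON schema aren’t documented here, so this hypothetical sketch assumes a path is just an array of control points. Each particle has a phase along a uniform Catmull-Rom curve through those points plus a fixed offset; a single `spread` factor scales the offsets, moving the effect from tight lines toward a looser stream.

```typescript
// Hypothetical sketch of spline-driven particles. A path is an array of
// control points (e.g. parsed from exported JSON; schema assumed).
type P3 = [number, number, number];

// Uniform Catmull-Rom segment from p1 (t = 0) to p2 (t = 1).
function catmullRom(p0: P3, p1: P3, p2: P3, p3: P3, t: number): P3 {
  const t2 = t * t;
  const t3 = t2 * t;
  const out: P3 = [0, 0, 0];
  for (let k = 0; k < 3; k++) {
    out[k] = 0.5 * (
      2 * p1[k] +
      (-p0[k] + p2[k]) * t +
      (2 * p0[k] - 5 * p1[k] + 4 * p2[k] - p3[k]) * t2 +
      (-p0[k] + 3 * p1[k] - 3 * p2[k] + p3[k]) * t3
    );
  }
  return out;
}

// Point at u in [0,1] along a path of at least two points (endpoints repeated).
function pointOnPath(path: P3[], u: number): P3 {
  const segs = path.length - 1;
  const x = Math.min(Math.max(u, 0), 1) * segs;
  const i = Math.min(Math.floor(x), segs - 1);
  const at = (j: number) => path[Math.min(Math.max(j, 0), path.length - 1)];
  return catmullRom(at(i - 1), at(i), at(i + 1), at(i + 2), x - i);
}

interface SplineParticle {
  phase: number; // starting position along the path, in [0,1)
  offset: P3;    // fixed displacement from the spline center
}

// spread near 0 gives tight "lines"; larger spread gives a flowing stream.
function updateSplineParticles(path: P3[], ps: SplineParticle[], time: number, speed: number, spread: number): P3[] {
  return ps.map((p): P3 => {
    const u = (p.phase + time * speed) % 1; // loop along the path
    const c = pointOnPath(path, u);
    return [c[0] + p.offset[0] * spread, c[1] + p.offset[1] * spread, c[2] + p.offset[2] * spread];
  });
}
```

Catmull-Rom passes through every control point, so the particles follow the exported path exactly at its vertices without any extra tangent data.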

Audio Chat in the Galaxy Lobby

Between stories, users were taken to a holding room, or lobby, where they could hang out and chat with each other. This feature worked across all devices, so users in VR, on desktop or on mobile could all move around and chat together.

Given this was a semi-networking experience at the Future of Everything Festival, we wanted users to be able to hold individual conversations rather than one big group conversation. To allow for this, we used proximity-based audio, adjusting the level of each user’s audio so that particle avatars closer to you are heard more loudly. This let users move around the space together and find a spot to have their own conversation.

As desktop users were floating in the sky and VR users were on the ground, we needed a way to bring the two together for proximity-based audio. To solve this, desktop users could zoom in close to the ground to be right next to the VR avatars and start a conversation across devices.

To manage this in development, we used the distance between avatars to drive a Doppler effect as users moved toward or away from each other. This distance was passed to each remote media stream, which modified its sound level based on proximity.
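As a hedged sketch of the distance-to-volume side only, the functions below use the Web Audio API’s “inverse” distance model formula, with exponential smoothing so levels glide rather than jump as avatars move. In a browser, each remote `MediaStream` could feed a `MediaStreamAudioSourceNode` and a `GainNode` whose gain is set from these values; that wiring is left out so the sketch runs anywhere. The parameter defaults and function names are assumptions.

```typescript
// Hypothetical sketch of proximity-based voice levels.
type Avatar = [number, number, number];

// Web Audio "inverse" distance model: full volume inside refDistance,
// then falling off as refDistance / (refDistance + rolloff * extra distance).
function inverseDistanceGain(distance: number, refDistance = 1, rolloff = 1): number {
  const d = Math.max(distance, refDistance);
  return refDistance / (refDistance + rolloff * (d - refDistance));
}

// Exponential approach toward the target, similar in spirit to
// AudioParam.setTargetAtTime, so volume changes don't click or jump.
function smoothGain(current: number, target: number, dt: number, timeConstant = 0.1): number {
  return target + (current - target) * Math.exp(-dt / timeConstant);
}

// Per frame: compute each remote user's target gain from avatar distance.
function proximityGains(self: Avatar, others: Map<string, Avatar>, refDistance: number, rolloff: number): Map<string, number> {
  const gains = new Map<string, number>();
  for (const [id, p] of others) {
    const d = Math.hypot(p[0] - self[0], p[1] - self[1], p[2] - self[2]);
    gains.set(id, inverseDistanceGain(d, refDistance, rolloff));
  }
  return gains;
}
```

Raising `rolloff` makes groups more private by fading distant voices faster, while `refDistance` sets the radius of a comfortable full-volume “conversation bubble”.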

To enhance the sensory experience, we also added a “high five” feature that triggers the controller’s haptics, making it vibrate when two users high-five in VR. Just like a real high five, it is triggered by the distance between the avatars’ hand controllers.
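A minimal sketch of the distance trigger, assuming hand positions in meters. It fires once when any pair of hands comes within `threshold`, and re-arms only after they separate past `release`, so the controller doesn’t buzz continuously while hands overlap. The threshold values are hypothetical; in a browser, a `true` result might call `pulse()` on a controller’s `gamepad.hapticActuators[0]` where the WebXR browser supports it.

```typescript
// Hypothetical high-five detector with hysteresis (trigger vs release distance).
type Hand = [number, number, number];

class HighFiveDetector {
  private armed = true;
  private threshold: number;
  private release: number;

  constructor(threshold = 0.12, release = 0.25) {
    this.threshold = threshold;
    this.release = release;
  }

  // Returns true only on the frame a high five is detected.
  update(myHands: Hand[], theirHands: Hand[]): boolean {
    let closest = Infinity;
    for (const a of myHands) {
      for (const b of theirHands) {
        closest = Math.min(closest, Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]));
      }
    }
    if (this.armed && closest < this.threshold) {
      this.armed = false; // fire once, then wait for hands to separate
      return true;
    }
    if (!this.armed && closest > this.release) this.armed = true;
    return false;
  }
}
```

Using a release distance larger than the trigger distance avoids repeated pulses from tracking jitter right at the threshold.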

Live at the Future of Everything Festival

From May 11–13, 2021, we went live at the festival. Over the course of the event, more than 3,000 attendees from 42 countries took to The Field, experiencing our web and VR stories and connecting in the networking galaxy. Attendees spent an average of 3 minutes 6 seconds in the space, and some returned multiple times over the course of a few days. Thanks to the amazing team behind WSJ Custom Events for putting this together. While it’s no longer live at the festival, The Field can still be experienced here.