The Frontier Within

Storytelling with biometrics across physical and digital spaces

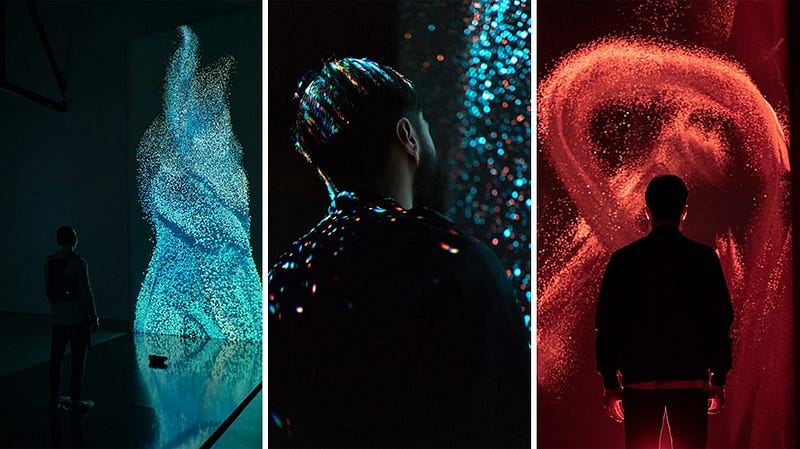

The Frontier Within is an immersive installation and web experience that captures the participant’s circulatory, respiratory and nervous system data, and transforms it into a living, breathing, interactive portrait of the body.

It ran as a physical installation at Studio 525 in New York City on June 21–22, 2019, and continues to be available online.

The Vision

The Frontier Within is a promotional campaign from Thorne, a health supplement company that develops high-grade, innovative formulations. It aims to get people thinking about their “inner self”: the parts of the body we don’t often think about, namely our lungs, our brain and our heart.

Holistically, the campaign exists as an interactive digital installation, a web experience and a film that together tell an artful story driven by science and data.

How well do you really know yourself? Look beyond the surface and you’ll discover a whole world within that’s more complex and beautiful than you could ever have known…

For us, The Frontier Within presented an opportunity to work towards a long-term passion: using web technology to create an experience that bridges the gap between digital and physical. Our mantra of “taking the web outside the browser” in this case meant bringing it to an 18-foot monolithic 4K projection in an art gallery in Chelsea, Manhattan.

The Plan

At SXSW 2019 we connected with Droga5 and learned about their vision to create an art exhibition, commissioned by Thorne Research, that would explore the invisible processes within every human being.

While the creative itself was intensely intimate, a strategic priority was ensuring it was widely distributed and could reach as many users as possible. This led us to separate the campaign into three intertwined components: a film, a physical installation, and a web experience. The three channels were interconnected in creative direction and story and, importantly, this setup allowed us to implement a software solution across the physical installation and the web experience that shared the same codebase.

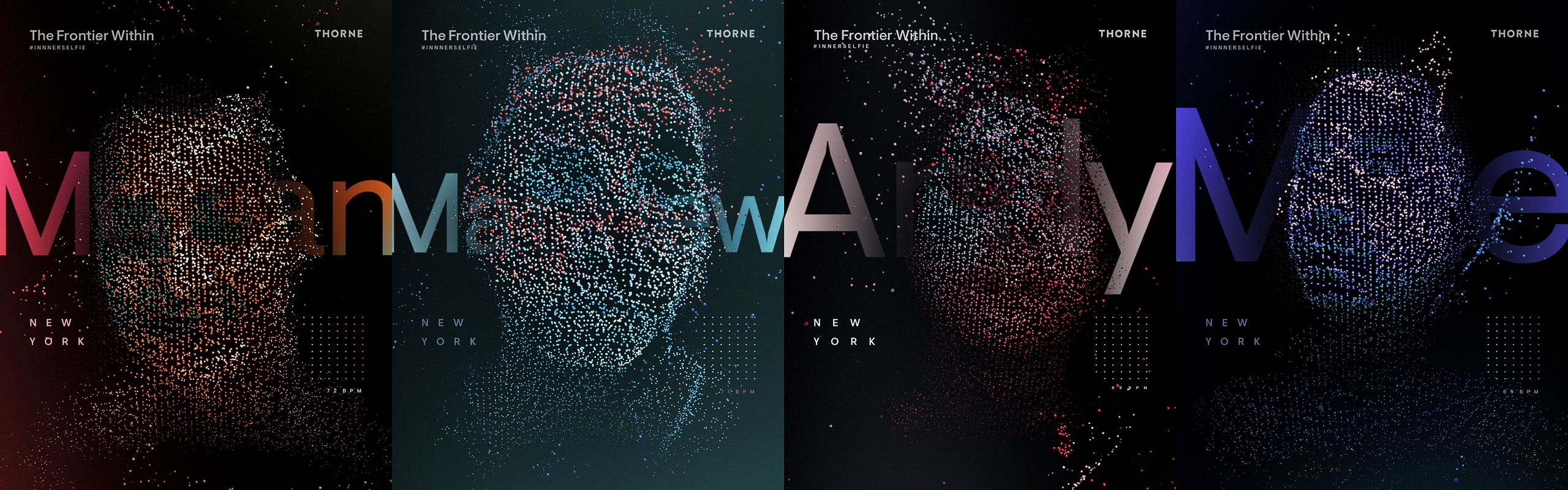

With this approach in mind, we quickly teamed up with Marshmallow Laser Feast (MLF), who led creative across the film and the physical installation, and Plan8, who designed sound across all channels. Together, we developed a three-chapter story exploring the lungs, heart and nervous system. The story builds to a big reveal at the end, where the user would experience their ‘inner selfie’. This would then be made available for them to take away and share on social media.

The Tech

Given MLF’s previous particle work and stunning film spot, the bar for visual quality was extremely high, and we had to develop a series of new tools and techniques to meet it. We started with a foundation of existing tools:

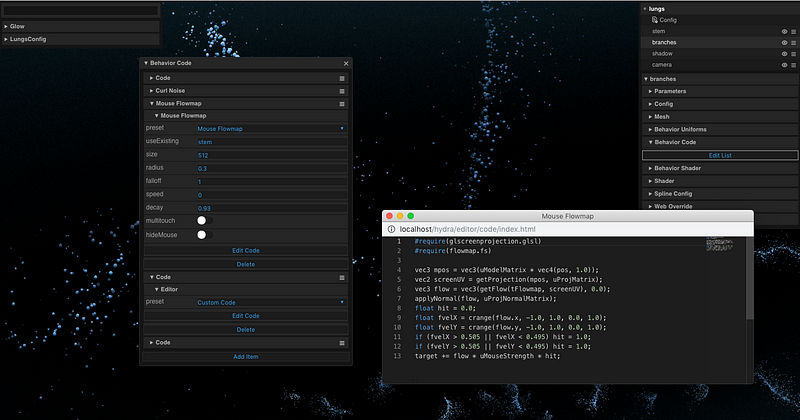

- Hydra framework + 3D engine

- Aura for the installation renderer (Windows app)

- GUI toolkit for live editing

- Our GPU particle engine

While our tech article goes deep into the inner workings of the above, a few key moments stood out during development:

- We created an optimized path for rendering single-quad geometry, as used by GPU particle simulations and post-processing. In these scenarios it reduced CPU overhead by 50%, which directly translated into more particles on every device.

- Running Aura as a desktop app proved key to smooth rendering. No matter how performant the code is, the browser could decide to perform a background task at any moment, causing a micro-stutter. While mostly unnoticeable on a small screen, any stutter on an 18-foot screen would be glaringly obvious.

- The GUI toolkit turned out to be a lifesaver because of how quickly it let us edit the many shaders driving both particle motion and visuals. Its underlying WebSocket server meant we could live-edit from a laptop and see the changes in real time on the big screen.
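The live-editing loop can be pictured as a small message protocol between the laptop and the render machine. This is a minimal sketch, not the toolkit’s actual protocol: the `LiveEdit` message shape, the `Material` stand-in and the `applyEdit` function are all hypothetical names chosen for illustration.

```typescript
// Hypothetical messages sent from the laptop GUI to the render machine.
type LiveEdit =
  | { type: "uniform"; target: string; name: string; value: number | number[] }
  | { type: "shader"; target: string; source: string };

// Hypothetical stand-in for a material on the render machine.
interface Material {
  uniforms: Record<string, number | number[]>;
  fragmentSource: string;
  needsRecompile: boolean;
}

// Parse one WebSocket payload and apply it to the named material.
// Returns false for malformed payloads or unknown targets.
function applyEdit(registry: Record<string, Material>, raw: string): boolean {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return false;
  }
  if (typeof parsed !== "object" || parsed === null) return false;
  const msg = parsed as LiveEdit;
  // Own-property check so names like "toString" can't match Object.prototype.
  if (!Object.prototype.hasOwnProperty.call(registry, msg.target)) return false;
  const material = registry[msg.target];

  if (msg.type === "uniform") {
    // Uniforms are read every frame, so the change appears on the next frame.
    material.uniforms[msg.name] = msg.value;
    return true;
  }
  if (msg.type === "shader") {
    // Swap in the new source and flag the material for recompilation.
    material.fragmentSource = msg.source;
    material.needsRecompile = true;
    return true;
  }
  return false;
}
```

In a browser this could be wired up as `socket.onmessage = (event) => applyEdit(materials, event.data)`, where `materials` is the (hypothetical) registry. Separating uniform edits from shader edits matters because only the latter needs a recompile.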

Particles

MLF’s creative direction was crystal clear: it’s all about the particles. Millions of them, each with unique properties, together forming a recognizable and accurate system within the body.

To push particles further than we had before, we needed tools and abstractions that would do the heavy lifting and get us from point A to B quickly. We started by building a new layer on top of our existing GPU particle engine, loosely modeled on a node-based system, that let us add “behaviors” to each particle system and quickly edit shader snippets in the browser.
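One way to picture the behavior layer: each behavior contributes a GLSL snippet that updates particle state, and the layer concatenates the enabled snippets, in order, into one simulation shader. This is a minimal sketch under assumptions; the `Behavior` shape, the `pos`/`vel` variable names, the `tPos`/`tVel` textures and `composeSimulationShader` are hypothetical, not the engine’s API.

```typescript
// Hypothetical behavior: a named GLSL snippet plus the uniforms it declares.
interface Behavior {
  name: string;
  uniforms: string[]; // e.g. "float uFriction"
  glsl: string;       // may read and write `pos` and `vel` (vec3)
  enabled: boolean;
}

// Concatenate enabled behaviors, in order, into one simulation fragment shader.
// Each snippet is wrapped in its own block so local variables can't collide.
function composeSimulationShader(behaviors: Behavior[]): string {
  const active = behaviors.filter((b) => b.enabled);
  const uniforms = active
    .map((b) => b.uniforms.map((u) => `uniform ${u};`).join("\n"))
    .filter((s) => s.length > 0)
    .join("\n");
  const body = active
    .map((b) => `  // behavior: ${b.name}\n  {\n    ${b.glsl}\n  }`)
    .join("\n");
  return [
    "precision highp float;",
    "uniform sampler2D tPos;",
    "uniform sampler2D tVel;",
    "varying vec2 vUv;",
    uniforms,
    "void main() {",
    "  vec3 pos = texture2D(tPos, vUv).xyz;",
    "  vec3 vel = texture2D(tVel, vUv).xyz;",
    body,
    // This single pass writes the integrated position; an engine would
    // normally also write velocity to a separate pass or render target.
    "  gl_FragColor = vec4(pos + vel, 1.0);",
    "}",
  ].join("\n");
}
```

Because every behavior is just a string, toggling or live-editing one only means recomposing and recompiling the shader.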

We needed to create complex motion that would ultimately resolve into a recognizable shape. MLF were creating the film and prototyping in Houdini, producing intricate fluid simulations. Because our build had to scale from a mobile phone up to a powerful gaming computer, we couldn’t simply reproduce simulations of that complexity in real time, so we had to find a cleverer solution.

The idea we came up with was to encode the motion of particles into data that the GPU could look up, moving each particle with realistic velocity. In practice, we used Cinema4D to build each shape from individual splines, then animated a single particle along each spline from beginning to end so that its velocity was captured.
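The baked motion can be thought of as a lookup table. This sketch assumes each spline was exported as the animated particle’s per-frame positions (the real export format isn’t described, so the `Vec3[][]` input is an assumption) and packs them into RGBA float data: one row per spline, a fixed number of samples per row, with the alpha channel holding speed so velocity survives the encoding. `resample` and `encodeSplines` are hypothetical names.

```typescript
type Vec3 = [number, number, number];

// Resample per-frame positions to `count` points (count >= 2) spaced evenly
// in time rather than distance, so acceleration and deceleration survive.
function resample(points: Vec3[], count: number): Vec3[] {
  const out: Vec3[] = [];
  for (let i = 0; i < count; i++) {
    const t = (i / (count - 1)) * (points.length - 1);
    const i0 = Math.floor(t);
    const i1 = Math.min(i0 + 1, points.length - 1);
    const f = t - i0;
    const a = points[i0];
    const b = points[i1];
    out.push([a[0] + (b[0] - a[0]) * f, a[1] + (b[1] - a[1]) * f, a[2] + (b[2] - a[2]) * f]);
  }
  return out;
}

// Pack splines into RGBA float texel data: one row per spline,
// `samplesPerSpline` texels per row. RGB = position, A = speed
// (distance travelled per sample step).
function encodeSplines(splines: Vec3[][], samplesPerSpline: number): Float32Array {
  const data = new Float32Array(splines.length * samplesPerSpline * 4);
  splines.forEach((spline, row) => {
    const samples = resample(spline, samplesPerSpline);
    samples.forEach((p, col) => {
      // Measure against the next sample, or the previous one at the end.
      const q = samples[col < samples.length - 1 ? col + 1 : col - 1];
      const dx = q[0] - p[0];
      const dy = q[1] - p[1];
      const dz = q[2] - p[2];
      const o = (row * samplesPerSpline + col) * 4;
      data[o] = p[0];
      data[o + 1] = p[1];
      data[o + 2] = p[2];
      data[o + 3] = Math.sqrt(dx * dx + dy * dy + dz * dz);
    });
  });
  return data;
}
```

The resulting array could be uploaded as a float texture that is `samplesPerSpline` wide and one row per spline tall (in WebGL 2, for example, with an `RGBA32F` internal format).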

Once initialized in the real-time engine, millions of particles are distributed across the splines within the system. Each particle has a “life” value from 0 to 1 that determines where along its spline to look up its position. From there, it’s a matter of using randomness to offset each particle to create “thickness”, and applying parameters such as speed multipliers and curl noise to add complexity and richness to the animation.
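In the engine this lookup would run in a shader; the CPU-side sketch below mirrors the math so it can be read and tested. It is a sketch under assumptions: the wrap-around `life`, the per-particle `seed`, the `thickness` radius and the sine-based hash (a common shader idiom) are illustrative choices, curl noise is omitted, and all function names are hypothetical.

```typescript
type Vec3 = [number, number, number];

// Shader-style hash: a pseudo-random value in [0, 1) from a seed.
function hash01(seed: number): number {
  const s = Math.sin(seed * 12.9898) * 43758.5453;
  return s - Math.floor(s);
}

// Advance life along the spline, wrapping from 1 back to 0.
function advanceLife(life: number, dt: number, speedMultiplier: number): number {
  const next = life + dt * speedMultiplier;
  return next - Math.floor(next);
}

// Position = point on the baked spline at `life`, plus a fixed per-particle
// random offset inside a sphere of radius `thickness`.
function particlePosition(samples: Vec3[], life: number, seed: number, thickness: number): Vec3 {
  const t = Math.min(Math.max(life, 0), 1) * (samples.length - 1);
  const i0 = Math.floor(t);
  const i1 = Math.min(i0 + 1, samples.length - 1);
  const f = t - i0;
  const a = samples[i0];
  const b = samples[i1];
  // Random direction plus cube-root radius spreads offsets through the volume.
  const theta = 2 * Math.PI * hash01(seed);
  const cosPhi = 2 * hash01(seed + 1) - 1;
  const sinPhi = Math.sqrt(1 - cosPhi * cosPhi);
  const r = thickness * Math.pow(hash01(seed + 2), 1 / 3);
  return [
    a[0] + (b[0] - a[0]) * f + r * sinPhi * Math.cos(theta),
    a[1] + (b[1] - a[1]) * f + r * sinPhi * Math.sin(theta),
    a[2] + (b[2] - a[2]) * f + r * cosPhi,
  ];
}
```

Each frame, a particle would call `advanceLife` and then `particlePosition`; on the GPU the same math runs for every particle in parallel, reading samples from the baked texture instead of an array.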

We built a proof of concept that demonstrated the technique in action and showed it was a viable way forward for the project.

However, we quickly realized that creating abstract particle flows was much easier than creating complex systems such as the lungs. We kept iterating on our particle behaviors, adding more control, such as spawning particles in multiple groups, and more attributes, such as friction and additional layers of noise, to arrive at the final particle system.

In the final polish, we refined velocity-based color shifting, particle self-shadowing, motion blur and depth of field.

The Installation

For the physical build, we worked with MLF and Plan8 to integrate a number of sensors into the experience. Each guest was fitted with a Subpac equipped with a heart-rate sensor that clipped onto the ear, headphones, and a breath sensor on the front strap.

For the lungs, the brightness of the particles was directly linked to the motion of each in- and out-breath. The heart rate collected from the sensor set the tempo of the heart particles, and the Subpac vibrated with each heartbeat. For the nervous system, motion data collected from a Kinect placed in front of the user triggered particles to fire off streams of light and color representing synapses.
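The sensor-to-visual mappings can be sketched as a few small functions. Only the beat-interval arithmetic (60,000 ms divided by BPM) is fixed; everything else is an assumption for illustration: the breath sensor is assumed to report a raw value calibrated per guest against its observed min and max, and the smoothing factor, pulse shape and names (`BreathMapper`, `heartPulse`) are hypothetical.

```typescript
// Maps raw breath readings to a particle brightness in [0, 1].
class BreathMapper {
  private min = Infinity;
  private max = -Infinity;
  private smoothed = 0;
  private readonly smoothing: number;

  // smoothing in (0, 1]: higher reacts faster, lower hides more sensor jitter.
  constructor(smoothing = 0.2) {
    this.smoothing = smoothing;
  }

  update(raw: number): number {
    // Widen the calibration range as the guest's breathing is observed.
    this.min = Math.min(this.min, raw);
    this.max = Math.max(this.max, raw);
    const range = this.max - this.min;
    const normalized = range > 0 ? (raw - this.min) / range : 0;
    // Exponential smoothing toward the latest normalized reading.
    this.smoothed += (normalized - this.smoothed) * this.smoothing;
    return this.smoothed;
  }
}

// Milliseconds between beats for a given heart rate.
function beatIntervalMs(bpm: number): number {
  return 60000 / bpm;
}

// Pulse intensity in (0, 1] at time `ms`: peaks at each beat, then decays
// exponentially until the next one, e.g. to drive heart particle brightness.
function heartPulse(ms: number, bpm: number, decay = 8): number {
  const interval = beatIntervalMs(bpm);
  const phase = (ms % interval) / interval; // 0..1 within the current beat
  return Math.exp(-decay * phase);
}
```

Driving the heart particles from a phase rather than from raw sensor events keeps the animation steady between readings, while a new BPM simply changes the interval.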

The final chapter of the installation combined the depth camera, color camera and skeleton data to create optical flow. Particles spawned along the outline of the person and flowed in the direction they were moving, based on velocities calculated from each joint in the skeleton data. For an additional level of richness, we rendered a fluid simulation from the skeleton data and used the fluid’s velocity to affect the particles.
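Per-joint velocity is a finite difference between consecutive skeleton frames. The sketch assumes a hypothetical `SkeletonFrame` shape (a timestamp plus a map from joint name to position in meters) rather than any Kinect SDK type, and shows one simple way, via the hypothetical `nearestJointVelocity`, for a particle near the silhouette to pick up the motion of its closest joint.

```typescript
type Vec3 = [number, number, number];

// Hypothetical frame shape: timestamp plus joint name -> position (meters).
interface SkeletonFrame {
  timeMs: number;
  joints: Record<string, Vec3>;
}

// Velocity (meters/second) of every joint present in both frames.
function jointVelocities(prev: SkeletonFrame, curr: SkeletonFrame): Record<string, Vec3> {
  const out: Record<string, Vec3> = {};
  const dt = (curr.timeMs - prev.timeMs) / 1000;
  if (dt <= 0) return out;
  for (const name in curr.joints) {
    const a = prev.joints[name];
    const b = curr.joints[name];
    if (!a || !b) continue; // joint wasn't tracked in the previous frame
    out[name] = [(b[0] - a[0]) / dt, (b[1] - a[1]) / dt, (b[2] - a[2]) / dt];
  }
  return out;
}

// Velocity of the joint closest to point `p` (zero if none are tracked).
function nearestJointVelocity(p: Vec3, frame: SkeletonFrame, velocities: Record<string, Vec3>): Vec3 {
  let best: Vec3 = [0, 0, 0];
  let bestDist = Infinity;
  for (const name in velocities) {
    const j = frame.joints[name];
    if (!j) continue;
    const dx = j[0] - p[0];
    const dy = j[1] - p[1];
    const dz = j[2] - p[2];
    const d = dx * dx + dy * dy + dz * dz; // squared distance is enough to compare
    if (d < bestDist) {
      bestDist = d;
      best = velocities[name];
    }
  }
  return best;
}
```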

The Web

A key requirement of the entire experience was that it be accessible on the web. Throughout the creative and development process we kept the web in mind and shared code between all overlapping components.

To scale performance from older mobile phones up to the top-of-the-line installation rig, we created a series of tests that scaled the number of particles within each system and the post-processing effects layered on top. This way, the installation rig would render 5 million particles while a five-year-old mobile phone would render 50 thousand, all without any additional code required in the project itself. We detected which GPU a device had, assigned it to one of six performance tiers, and scaled the number of particles accordingly.
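Tier selection can be sketched as two lookups: GPU renderer string to tier, then tier to particle budget. In a browser, the renderer string can often be read through the `WEBGL_debug_renderer_info` extension (`UNMASKED_RENDERER_WEBGL`). Everything else here is hypothetical: the regex table is a placeholder, not the benchmark data behind the real tiers, and the intermediate budgets are interpolated geometrically between the two figures above (50 thousand and 5 million), not the project’s actual numbers.

```typescript
// Hypothetical renderer-string patterns, checked in order; first match wins.
const TIER_RULES: Array<{ pattern: RegExp; tier: number }> = [
  { pattern: /RTX|GTX 10[78]0/i, tier: 5 },
  { pattern: /GTX|Radeon/i, tier: 4 },
  { pattern: /Apple|Adreno \(TM\) 6/i, tier: 3 },
  { pattern: /Intel/i, tier: 2 },
  { pattern: /Adreno|Mali/i, tier: 1 },
];

const TIER_COUNT = 6;
const MIN_PARTICLES = 50000;   // lowest tier (figure from the text)
const MAX_PARTICLES = 5000000; // installation rig (figure from the text)

function detectTier(renderer: string): number {
  for (const rule of TIER_RULES) {
    if (rule.pattern.test(renderer)) return rule.tier;
  }
  return 0; // unknown GPU: stay conservative
}

// Geometric interpolation: each tier step multiplies the budget by the same factor.
function particleBudget(tier: number): number {
  const t = Math.min(Math.max(tier, 0), TIER_COUNT - 1) / (TIER_COUNT - 1);
  return Math.round(MIN_PARTICLES * Math.pow(MAX_PARTICLES / MIN_PARTICLES, t));
}
```

A geometric rather than linear spread is one reasonable choice here, since GPU capability across device generations tends to differ by multiples rather than fixed increments.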

Since the installation used physical sensors, we needed a way to tell the same story on the web using the device’s camera. For heart rate, we isolate the green-channel data from the image of the user’s face and detect subtle changes over time, a technique developed at MIT and described in this video. For the nervous system’s motion, we simply measure how many pixels change from frame to frame to derive a range of activity.
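Both camera signals reduce to simple per-frame statistics. The sketch below is a simplification under stated assumptions, not the production code. It averages the green channel inside a face rectangle (assumed to come from the face tracker), estimates heart rate as the strongest frequency in a plausible 45–180 BPM band, and measures motion as the fraction of pixels whose summed RGB difference exceeds a threshold. The function names, the band and the threshold are hypothetical. Practical camera-based heart-rate estimation usually adds detrending, band-pass filtering and motion compensation.

```typescript
interface Rect { x: number; y: number; w: number; h: number; }

// Mean green value inside a rectangle of an RGBA frame (e.g. ImageData.data).
function meanGreen(rgba: Uint8ClampedArray, width: number, roi: Rect): number {
  let sum = 0;
  for (let y = roi.y; y < roi.y + roi.h; y++) {
    for (let x = roi.x; x < roi.x + roi.w; x++) {
      sum += rgba[(y * width + x) * 4 + 1]; // offset 1 = green in RGBA
    }
  }
  return sum / (roi.w * roi.h);
}

// Estimate BPM from green means sampled at `fps` by scanning candidate rates
// and picking the one with the most spectral power (a brute-force DFT).
function estimateBpm(signal: number[], fps: number, minBpm = 45, maxBpm = 180): number {
  const mean = signal.reduce((a, b) => a + b, 0) / signal.length;
  let bestBpm = minBpm;
  let bestPower = -1;
  for (let bpm = minBpm; bpm <= maxBpm; bpm++) {
    const w = (2 * Math.PI * (bpm / 60)) / fps; // radians per sample
    let re = 0;
    let im = 0;
    for (let n = 0; n < signal.length; n++) {
      const v = signal[n] - mean; // remove the constant skin/lighting offset
      re += v * Math.cos(w * n);
      im += v * Math.sin(w * n);
    }
    const power = re * re + im * im;
    if (power > bestPower) {
      bestPower = power;
      bestBpm = bpm;
    }
  }
  return bestBpm;
}

// Fraction of pixels (0..1) whose summed absolute RGB change exceeds `threshold`.
function motionActivity(prev: Uint8ClampedArray, curr: Uint8ClampedArray, threshold = 30): number {
  let changed = 0;
  for (let i = 0; i < curr.length; i += 4) {
    const d =
      Math.abs(curr[i] - prev[i]) +
      Math.abs(curr[i + 1] - prev[i + 1]) +
      Math.abs(curr[i + 2] - prev[i + 2]);
    if (d > threshold) changed++;
  }
  return changed / (curr.length / 4);
}
```

In practice `estimateBpm` would run over a sliding window of several seconds of `meanGreen` values, since a few heartbeats are needed before one frequency clearly dominates.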

We developed a custom C++ OpenCV project for accurate face tracking, compiled to WebAssembly for use in the browser. Beyond tracking the face for heart-rate detection, it let us create a simplified optical flow from the velocity of the tracked face, adding a layer of interactivity to the web end screen.

Sequencing

When it came time to put the entire build together into an end-to-end experience, MLF suggested a sequencing workflow they had used in the past. We used Cinema4D’s timeline to animate dozens of unique properties in each chapter. From camera movement to colors to individual shader properties, we streamed data live from C4D into the browser to create an editorial workflow. Once editing was finished, we exported a JSON file that is loaded at runtime and plays back the stream of data.
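Playback of the exported file can be sketched as keyframe interpolation. The JSON shape here (a map from property path to `[timeSeconds, value]` keyframes) and the function names are hypothetical stand-ins, since the real export format isn’t described, and C4D’s eased curves are flattened to linear interpolation.

```typescript
// Hypothetical export format: property path -> keyframes sorted by time.
type Keyframe = [number, number]; // [timeSeconds, value]
type Timeline = Record<string, Keyframe[]>;

// Value of one track at time t, linearly interpolated and clamped at the ends.
// Assumes at least one keyframe, with strictly increasing times.
function sampleTrack(keys: Keyframe[], t: number): number {
  if (t <= keys[0][0]) return keys[0][1];
  const last = keys[keys.length - 1];
  if (t >= last[0]) return last[1];
  // Binary search for the segment with keys[lo].time <= t < keys[hi].time.
  let lo = 0;
  let hi = keys.length - 1;
  while (hi - lo > 1) {
    const mid = (lo + hi) >> 1;
    if (keys[mid][0] <= t) lo = mid;
    else hi = mid;
  }
  const [t0, v0] = keys[lo];
  const [t1, v1] = keys[hi];
  return v0 + (v1 - v0) * ((t - t0) / (t1 - t0));
}

// Evaluate every track at time t, e.g. once per frame, and hand the values
// to whatever applies them (camera, colors, shader uniforms).
function sampleTimeline(timeline: Timeline, t: number): Record<string, number> {
  const out: Record<string, number> = {};
  for (const path in timeline) out[path] = sampleTrack(timeline[path], t);
  return out;
}
```

At runtime the file would be parsed once, for example with `JSON.parse(text) as Timeline`, and sampled every frame with the current playback time.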

Backend Systems + Server Rendering

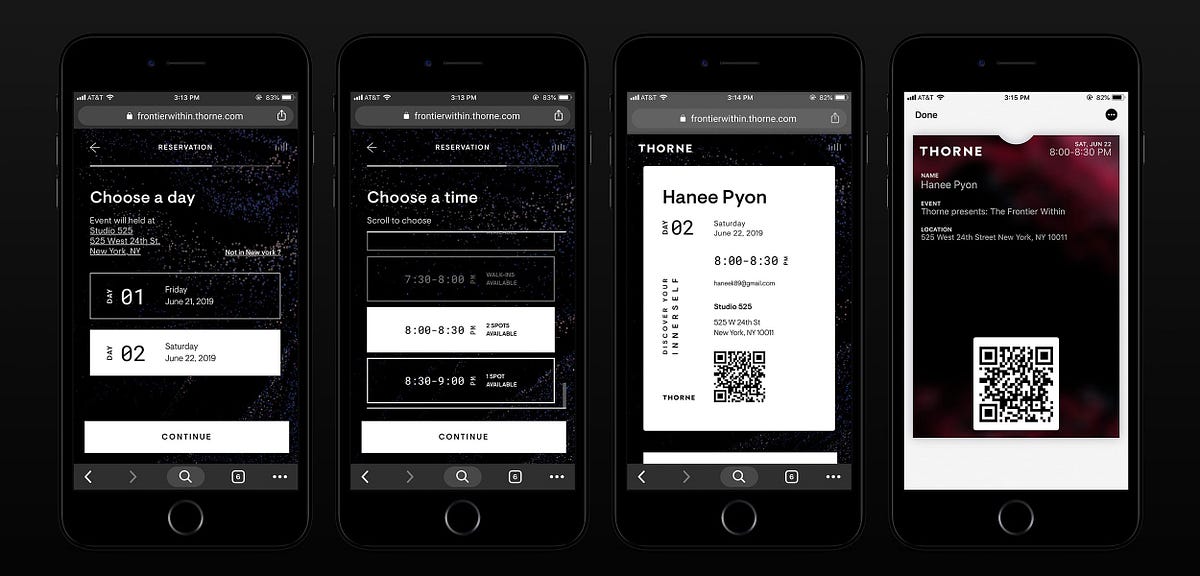

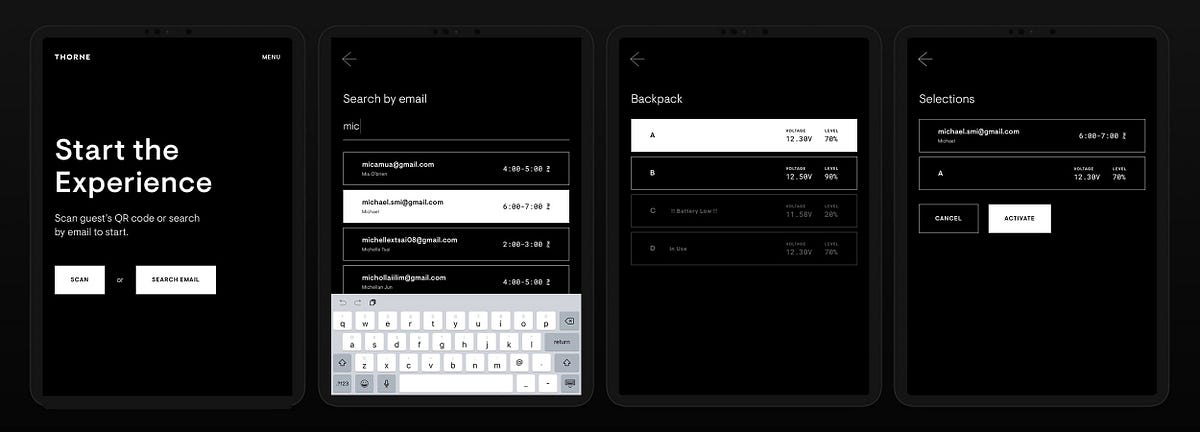

Reservations and Attendance: An online reservation system was developed for invited guests to reserve a predefined slot for the experience. Firestore and Cloud Functions were used to securely manage reservations and display available slots in real time. Passes and QR codes were generated, issued for Apple Wallet, and emailed to users to bring to the event. A web-based administration system was set up to monitor reservations, the video render queue, and users checking in and completing the experience.
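Managing slots securely largely comes down to making the capacity check and the write atomic, so two guests can’t both claim the last place. In Firestore, that check would typically run inside a transaction (`runTransaction`) so the read and the write commit together. The sketch below covers only the decision logic, as a pure function over hypothetical `Slot` and `ReserveResult` shapes, so it can be tested without Firebase; the rules themselves (invite-only, one slot per guest) are assumptions.

```typescript
// Hypothetical slot document.
interface Slot {
  id: string;
  capacity: number;
  reservedBy: string[];
}

type ReserveResult =
  | { ok: true; slot: Slot }
  | { ok: false; reason: "not-invited" | "already-reserved" | "full" };

// Decide whether `userId` may take a place in `slot`. Returns an updated copy
// rather than mutating, so the caller can write it back inside a transaction.
function reserve(
  slot: Slot,
  userId: string,
  invitedUsers: Record<string, boolean>,
  userSlots: Record<string, string>, // userId -> slot id already held
): ReserveResult {
  if (!invitedUsers[userId]) return { ok: false, reason: "not-invited" };
  if (userSlots[userId] !== undefined) return { ok: false, reason: "already-reserved" };
  if (slot.reservedBy.length >= slot.capacity) return { ok: false, reason: "full" };
  return { ok: true, slot: { ...slot, reservedBy: slot.reservedBy.concat(userId) } };
}
```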

Onsite Support and Management: A web-based system was developed for on-site docents to view the list of reserved users, view the list of checked-in users, scan QR codes, check users in and out, view the battery status of backpacks, assign backpacks and activate the experience. A web app displayed the latest stats on an iPad for users who had just finished the experience to review.

Moderation: Submitted selfies were passed through Cloud Vision for moderation and accepted only if a face was detected. Face detection was also used to position and crop the selfie images for the share reveal.
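Positioning and cropping from a detected face is mostly rectangle arithmetic. Cloud Vision’s face detection returns a bounding polygon for each face; this sketch assumes it has already been reduced to a rectangle, and the `aspect` and `padding` parameters and the `cropAroundFace` name are hypothetical choices for illustration.

```typescript
interface Box { x: number; y: number; width: number; height: number; }

// Crop of the given aspect ratio (width / height), centered on the face and
// sized so the crop is `padding` times the face height, clamped to the image.
function cropAroundFace(face: Box, imageW: number, imageH: number, aspect: number, padding = 2.5): Box {
  let height = face.height * padding;
  let width = height * aspect;
  // Shrink uniformly if the crop would be larger than the image.
  const scale = Math.min(1, imageW / width, imageH / height);
  width *= scale;
  height *= scale;
  const cx = face.x + face.width / 2;
  const cy = face.y + face.height / 2;
  // Center on the face, then slide back inside the image bounds.
  const x = Math.min(Math.max(cx - width / 2, 0), imageW - width);
  const y = Math.min(Math.max(cy - height / 2, 0), imageH - height);
  // Floor everything so the whole-pixel crop stays inside the image.
  return { x: Math.floor(x), y: Math.floor(y), width: Math.floor(width), height: Math.floor(height) };
}
```

Passing something like `aspect = 9 / 16` would produce a vertical crop suited to phone-shaped share formats; that value is only an example.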

Servers: A Node application running on dedicated hardware was developed to relay data from multiple sensors to the installation experience with minimal latency. This server also communicated with other systems to trigger video generation, and accepted commands from the scanner application to start and stop the main experience. Once a guest’s experience had finished, a set of servers on Google Compute Engine rendered personalized selfie videos using cloud-based GPUs. We achieved this by configuring GPU-enabled servers running a custom native application that could render WebGL in the cloud, with a queuing system set up to manage the workload.
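The queuing layer can be sketched as a FIFO of render jobs handed to GPU workers as they become free, with failed renders retried a limited number of times. This is a hypothetical in-memory sketch: the production queue’s technology isn’t described, and the `RenderQueue` class, its method names, the job fields and the retry limit are assumptions.

```typescript
// Hypothetical render job: one personalized selfie video.
interface RenderJob {
  id: string;
  userId: string;
  attempts: number;
}

class RenderQueue {
  private pending: RenderJob[] = [];
  private active: Record<string, RenderJob> = {}; // workerId -> job in progress
  private readonly maxAttempts: number;

  constructor(maxAttempts = 3) {
    this.maxAttempts = maxAttempts;
  }

  enqueue(id: string, userId: string): void {
    this.pending.push({ id, userId, attempts: 0 });
  }

  // Called by an idle GPU worker; returns the next job, or undefined if the
  // queue is empty or the worker already has a job in progress.
  claim(workerId: string): RenderJob | undefined {
    if (this.active[workerId]) return undefined;
    const job = this.pending.shift();
    if (job) {
      job.attempts++;
      this.active[workerId] = job;
    }
    return job;
  }

  // Called when a worker finishes. Failed jobs go to the back of the queue
  // until maxAttempts; after that they are dropped (a real system would
  // surface them, e.g. on an admin dashboard).
  complete(workerId: string, success: boolean): void {
    const job = this.active[workerId];
    if (!job) return;
    delete this.active[workerId];
    if (!success && job.attempts < this.maxAttempts) this.pending.push(job);
  }

  get size(): number {
    return this.pending.length;
  }
}
```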

Sharing: We created personalized videos for users to share their inner selfie. Once a video had been generated, it was emailed directly to the user as an attachment, so they could easily save it to their camera roll and upload it to Instagram.

Server-side render of shareable asset