World Brush

Download World Brush for iOS

With Google and Apple making major announcements about Augmented Reality in the past few months, it became clear that AR was going to make its way into the lives of millions of people. Active Theory primarily works with advanced web technology such as WebGL to develop immersive experience sites (e.g., Pottermore’s Explore Hogwarts), and we wondered if we could bring our tools, frameworks, and experience into this new frontier.

Since WebGL is based on OpenGL ES (the mobile variant of OpenGL, the native graphics API that powers millions of apps and games), we were able to create a native app platform that runs the same JavaScript code we write for the web. It renders graphics with OpenGL inside a native iOS or Android app and takes advantage of ARKit and ARCore.

Earlier this summer, we created a proof-of-concept experiment. Everyone at Active Theory is driven to use technology in creative ways. So far that has primarily meant client work, but the opportunity to create products using Augmented Reality compelled us to begin thinking about our first offering.

Introducing World Brush

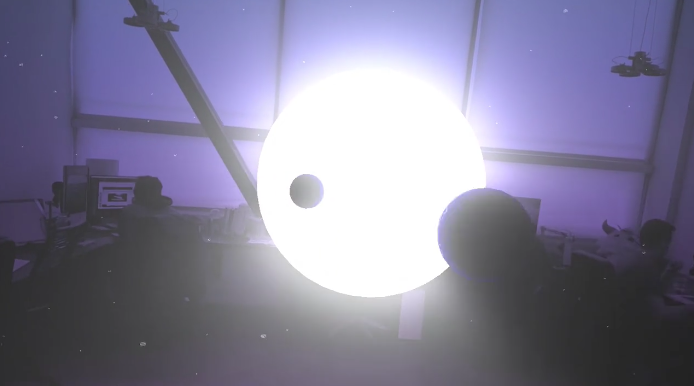

World Brush is an AR experience that lets users paint on the world for others to discover. Every painting is anonymous and only visible where it was created. Users can like, dislike, and report paintings, which helps hide the bad stuff and showcase the good stuff for others.

The idea stemmed from wanting to merge the physical and digital worlds in a way that was simple for any user to jump in and experience. It was easy to imagine people walking along Venice Beach and seeing virtual art appear around them, anchored to real objects. A simple drawing mechanic tied to the real world allows for a huge number of possibilities that transcend language barriers and fit within the context of each person’s unique experience.

We decided early on that World Brush would be a simplified tool and would not make users feel overwhelmed with options. From first launch, users can simply draw on their screen and see the line they are creating 1 meter in front of them. As the user begins to unlock additional brushes, simple sliders appear to change the line color and thickness.

Technology

Aside from the native code that provides the platform, all code specific to the World Brush application is written in JavaScript. The main AR portion of the experience, including the lines and user interface, is rendered in OpenGL using the three.js library. The brush purchase and map screens are standard web elements contained in a WebView layer that appears when triggered by a user action.

Rendering Lines

We drew on previous experience to create a new line rendering pipeline within our framework and three.js. As a line is drawn, we store data containing its X, Y, Z positions in space, line thickness, and color, among other attributes.
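As a rough illustration of the kind of data described above, here is a minimal sketch of a stroke recorder. The `Stroke` class, its field names, and the `minDistance` threshold are all hypothetical and are not World Brush’s actual format; the sketch only shows one plausible way to hold positions, thickness, and color together.

```javascript
// Hypothetical stroke record: names and fields are illustrative assumptions,
// not the actual World Brush data format.
class Stroke {
  constructor(color, thickness) {
    this.color = color;         // e.g. 0xff3366
    this.thickness = thickness; // line width in world units (meters)
    this.positions = [];        // flat list: [x0, y0, z0, x1, y1, z1, ...]
  }

  // Append a point. Points closer than minDistance to the previous one are
  // skipped so a slow finger does not flood the line with duplicates.
  addPoint(x, y, z, minDistance = 0.005) {
    const n = this.positions.length;
    if (n >= 3) {
      const dx = x - this.positions[n - 3];
      const dy = y - this.positions[n - 2];
      const dz = z - this.positions[n - 1];
      if (Math.hypot(dx, dy, dz) < minDistance) return false;
    }
    this.positions.push(x, y, z);
    return true;
  }

  get pointCount() {
    return this.positions.length / 3;
  }

  // Plain object that could be serialized and saved with a painting session.
  toJSON() {
    return {
      color: this.color,
      thickness: this.thickness,
      positions: this.positions.slice(),
    };
  }
}

// Usage: record a short stroke one meter in front of the session origin.
const stroke = new Stroke(0xff3366, 0.02);
stroke.addPoint(0, 0, -1);
stroke.addPoint(0.1, 0, -1);
```

Keeping positions in a flat array makes it straightforward to copy them into a typed array later when building geometry.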

Placing Paintings in the Real World

ARKit and ARCore provide tracking data relative to where the phone is in space when the app is opened. In order to place previously drawn paintings in the world, we orient the rendered scene with the compass so that, no matter what the device’s origin is, the drawings appear around it in the same direction they were drawn.
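The compass alignment described above comes down to a rotation about the vertical axis. The sketch below assumes a right-handed, Y-up coordinate system in which the session’s -Z axis is the direction the device faced at launch, and a compass heading measured in degrees clockwise from north. The function names are hypothetical, and the exact conventions in the app may differ.

```javascript
const DEG2RAD = Math.PI / 180;

// Rotate a point about the vertical (Y) axis in a right-handed, Y-up frame.
// A positive angle turns the -Z direction toward -X.
function rotateY([x, y, z], angleRad) {
  const c = Math.cos(angleRad);
  const s = Math.sin(angleRad);
  return [x * c + z * s, y, -x * s + z * c];
}

// Convert a point from session space (where -Z is wherever the device faced
// at launch) into a north-aligned frame (-Z = north, +X = east).
// headingDeg is the assumed compass heading of the session's -Z axis.
function sessionToNorth(point, headingDeg) {
  return rotateY(point, -headingDeg * DEG2RAD);
}

// Inverse: place a north-aligned point into the current session's space.
function northToSession(point, headingDeg) {
  return rotateY(point, headingDeg * DEG2RAD);
}

// Usage: a point drawn 1 m ahead while facing east is stored as "1 m east".
const stored = sessionToNorth([0, 0, -1], 90);
// A later visitor whose session started facing south sees it on their left.
const placed = northToSession(stored, 180);
```

In a three.js scene, the same effect could be achieved by rotating a parent group about its Y axis instead of transforming each point.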

In testing, we were able to pinpoint the user’s location based on GPS to within about 10–20 meters. Within that margin of error, a painting may appear offset by a few feet in position, but it should still face the correct direction.

Optimization

As the user draws, each individual line is rendered as a separate mesh with its own unique geometry captured from the painting motion. When a session is saved or a remote painting is loaded, all the individual lines within that session are merged into a single geometry. This reduces the overall number of draw calls and allows users to create larger and more elaborate paintings.

When a painting session is loaded, it is merged on a separate background thread using a custom native implementation of Web Workers. This prevents the main experience from freezing temporarily while heavy math calculations merge paintings together.
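The merge step described above can be sketched in plain JavaScript. This is a minimal illustration, assuming each line is an indexed triangle mesh with a `positions` typed array and an `indices` array. The `mergeLineGeometries` name and the input shape are hypothetical, and the real pipeline likely carries more attributes such as color and thickness.

```javascript
// Merge several indexed geometries into one so they can be drawn together.
// Assumed input shape (illustrative only):
//   { positions: Float32Array [x, y, z, ...], indices: Uint32Array }
function mergeLineGeometries(geometries) {
  let vertexCount = 0;
  let indexCount = 0;
  for (const g of geometries) {
    vertexCount += g.positions.length / 3;
    indexCount += g.indices.length;
  }

  const positions = new Float32Array(vertexCount * 3);
  const indices = new Uint32Array(indexCount);

  let vertexOffset = 0;
  let indexOffset = 0;
  for (const g of geometries) {
    // Copy vertex data into its slot in the merged buffer.
    positions.set(g.positions, vertexOffset * 3);
    // Indices must be shifted by the number of vertices already written.
    for (let i = 0; i < g.indices.length; i++) {
      indices[indexOffset + i] = g.indices[i] + vertexOffset;
    }
    vertexOffset += g.positions.length / 3;
    indexOffset += g.indices.length;
  }

  return { positions, indices };
}

// Usage: two single-triangle "lines" become one six-index geometry.
const lineA = {
  positions: new Float32Array([0, 0, 0, 1, 0, 0, 0, 1, 0]),
  indices: new Uint32Array([0, 1, 2]),
};
const lineB = {
  positions: new Float32Array([0, 0, -1, 1, 0, -1, 0, 1, -1]),
  indices: new Uint32Array([0, 1, 2]),
};
const merged = mergeLineGeometries([lineA, lineB]);
```

A function like this touches only typed arrays and has no rendering dependencies, which is what makes it a reasonable candidate for running on a background thread.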

Server-Side

We combined Google’s new serverless Cloud Functions with our Medusa server-side framework to save and retrieve painting data. The data is stored in Google’s Cloud Storage, which enables us to fetch it in milliseconds.

Painting session metadata, such as location and time of creation, is stored in Firebase, which made it simple to retrieve only the subset of paintings that were saved at the GPS coordinates where a user is physically located.
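To make the idea of a location-based subset concrete, here is a minimal in-memory sketch that filters painting metadata by distance from the user. It does not use the Firebase API. The record shape (`id`, `lat`, `lng`), the `paintingsNear` function, and the 25-meter radius are assumptions chosen for illustration, with the radius loosely based on the 10–20 meter GPS margin mentioned earlier.

```javascript
const EARTH_RADIUS_M = 6371000;

// Great-circle distance between two { lat, lng } points in meters (haversine).
function distanceMeters(a, b) {
  const toRad = (deg) => (deg * Math.PI) / 180;
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(h));
}

// Keep only paintings saved within radiusMeters of the user's position.
// The metadata shape is hypothetical: { id, lat, lng }.
function paintingsNear(paintings, here, radiusMeters = 25) {
  return paintings.filter((p) => distanceMeters(p, here) <= radiusMeters);
}

// Usage with made-up coordinates near Venice Beach.
const here = { lat: 33.985, lng: -118.4695 };
const nearby = paintingsNear(
  [
    { id: "a", lat: 33.9851, lng: -118.4695 }, // roughly 11 m north
    { id: "b", lat: 33.995, lng: -118.4695 },  // roughly 1.1 km north
  ],
  here
);
```

A production query would normally narrow the candidates on the server first, for example by bucketing coordinates, rather than scanning every record on the client.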

We also implemented a scoring algorithm that analyzes painting popularity and time of creation in order to serve users the highest quality content in popular locations that may have a large number of saved paintings.
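The actual scoring algorithm is not described here, so the following is only one hypothetical way to combine popularity with time of creation: net likes with an exponential decay by age. The field names, the `scorePainting` function, and the 72-hour half-life are all illustrative assumptions.

```javascript
const HOUR_MS = 60 * 60 * 1000;

// Hypothetical score: net likes (floored at zero, plus one so that new
// paintings are not stuck at zero) decayed by age with a fixed half-life.
function scorePainting(painting, now, halfLifeHours = 72) {
  const net = Math.max(painting.likes - painting.dislikes, 0) + 1;
  const ageHours = Math.max(now - painting.createdAt, 0) / HOUR_MS;
  return net * Math.pow(0.5, ageHours / halfLifeHours);
}

// Return a new array of paintings ordered from highest to lowest score.
function rankPaintings(paintings, now) {
  return paintings
    .slice()
    .sort((a, b) => scorePainting(b, now) - scorePainting(a, now));
}

// Usage with made-up data: a fresh popular painting, an older popular one,
// and a fresh disliked one.
const now = Date.UTC(2017, 8, 1);
const ranked = rankPaintings(
  [
    { id: "old-popular", likes: 10, dislikes: 0, createdAt: now - 72 * HOUR_MS },
    { id: "new-disliked", likes: 0, dislikes: 5, createdAt: now },
    { id: "new-popular", likes: 10, dislikes: 0, createdAt: now },
  ],
  now
);
```

With a decay like this, an older painting needs proportionally more likes to stay ahead of newer work, which keeps busy locations from being dominated by their earliest paintings.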

Going Forward

We’re excited to see what this new foray into Augmented Reality brings. We are always eager to take complicated technology and make it seem simple to the end user.